Artificial intelligence (AI) is changing every industry and growing faster and smarter each day.

It uses data to teach computers challenging tasks through methods such as machine learning (ML) and natural language processing (NLP).

Large language models (LLMs) are a good example. They use NLP to read and write text, and they power tools like Claude. LLMs are a form of generative AI, meaning they create new content; image generators like Midjourney apply generative AI to pictures rather than text.

LLMs can understand and generate natural language. A key method for steering them is few-shot prompting, which places a small set of examples in the prompt to help the LLM perform a specific task better.

This method helps LLMs give better results without lots of pre-programming.

This article explores few-shot prompting, a powerful technique that enables AI models to learn tasks from just a handful of examples. We’ll examine its significance, analyze practical examples, and showcase how businesses leverage this approach to drive innovation.

What is few-shot prompting?

Few-shot prompting is an advanced technique in natural language processing that leverages the vast knowledge base of large language models (LLMs) to perform specific tasks with minimal examples.

This approach allows AI systems to adapt to new contexts or requirements without extensive retraining.

Few-shot prompting guides the LLM in understanding the desired output format and style by providing a small set of demonstrative examples within the prompt. This enables it to generate highly relevant and tailored responses.

This method bridges the gap between the LLM’s broad understanding of language and the specific needs of a given task, making it a powerful tool for rapidly deploying AI solutions across diverse applications.

However, LLMs can give very different results depending on how a prompt is worded, because they interpret every input in context. A well-written prompt turns this sensitivity into an advantage.

When prompts are well-made, LLMs can do new tasks with just a few examples.

Why is few-shot prompting important?

Few-shot prompting is changing how we use AI. It makes AI smarter and more useful in many ways.

By one estimate, the global market for this skill was worth $213 million in 2023 and may reach $2.5 trillion by 2032. Projections like this suggest how important few-shot prompting is becoming in the AI world.

AI doesn’t need as much data or training to perform new tasks, so companies can use AI faster and for more jobs.

This method also helps AI adapt because it can learn new things without starting from scratch.

This is great for real-world problems where things change often. It’s like teaching a smart friend a new game with just a few examples.

Few-shot prompting often leads to better results, too. AI can give more accurate answers for specific tasks, which makes it very helpful in fields like medicine, finance, and customer care.

Overall, few-shot prompting is opening new doors for AI. It’s making AI more practical and accessible for many industries.

We’ll likely see AI helping in even more areas of our lives as it grows.

How few-shot prompting works

Zero-shot prompting provides no examples, and one-shot prompting provides exactly one. Few-shot prompting, by contrast, includes a small set of examples in the prompt.

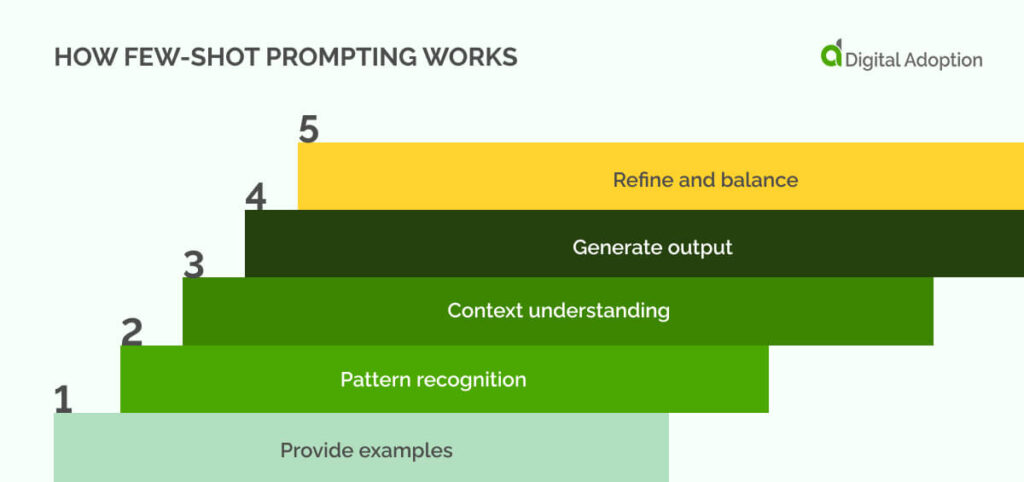

Here’s how few-shot prompting works:

Step 1: Provide examples

The process starts by giving the model 2 to 5 carefully chosen examples. These show the main parts of the task at hand.

Step 2: Pattern recognition

The model examines these examples to spot patterns and find key features important for the task.

Step 3: Context understanding

Using these patterns, the model grasps the context of the task. It is not retrained and its weights do not change; it applies its existing knowledge to the pattern shown in the prompt.

Step 4: Generate output

The model then uses its understanding to create relevant outputs for the new task, applying what it learned from the examples.

Step 5: Refine and balance

This method strikes a balance between being specific and flexible. It allows for more nuanced results compared to other methods.
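The steps above can be sketched as a small prompt builder. This is a minimal illustration, not a reference implementation: `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the tone-rewriting task are all hypothetical choices made for this example, and no model is called.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble a plain-text few-shot prompt (hypothetical helper)."""
    parts = [instruction.strip(), ""]
    # Step 1: place the demonstrations ahead of the new input.
    for source, target in examples:
        parts.append(f"Input: {source}")
        parts.append(f"Output: {target}")
        parts.append("")
    # Step 4: end with the new input and an open "Output:" slot
    # for the model to complete in the same pattern.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


# Illustrative task and examples, invented for this sketch.
prompt = build_few_shot_prompt(
    "Rewrite each sentence in a formal tone.",
    [
        ("gonna be late, sorry", "I apologize, but I will be late."),
        ("thx for the help", "Thank you for your assistance."),
    ],
    "can u send the file",
)
print(prompt)
```

The resulting string would be sent to whichever model you use. Keeping every example in the same format as the final, unanswered input is what lets the model continue the pattern.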

Applications of few-shot prompting

Few-shot prompting is changing how we use AI in many fields. It’s important to understand where and how it’s used.

This method helps AI learn quickly from just a few examples. These examples show how versatile and powerful it is. They help us see how AI is becoming smarter and more helpful in our daily lives.

From complex thinking to language tasks, few-shot prompting is making a big impact. It’s helping businesses make better choices and solve hard problems, and it’s making AI reasoning appear more human-like.

Looking at these uses, we can better grasp how few-shot prompting is shaping the future of AI. It’s opening new doors for using AI in practical, everyday ways.

Let’s look at some top applications of few-shot prompting.

Classification

Few-shot prompting improves classification tasks. It requires fewer labeled datasets and lets models group data with just a few examples.

This helps in places where new categories often appear. For example, in online shops, few-shot prompting helps group new products quickly, improving inventory management and customer experience. It’s also used in healthcare to sort medical records and helps identify conditions based on limited patient data. This makes processes more efficient in many sectors.

Sentiment analysis

Few-shot prompting improves sentiment analysis. It helps models detect emotions and opinions with limited data.

It’s used in customer feedback analysis and helps understand the tone of reviews. This is crucial for brand management and is used to check public opinion on social media. It allows for better sentiment grouping, even with unique expressions.

This gives more reliable insights into consumer behavior and helps make better marketing decisions.

Language generation

Few-shot prompting is changing language generation. It helps generative AI models produce good, relevant text with few examples.

In content creation, it helps produce personalized marketing messages. In customer support, it helps draft useful responses to customer questions.

It also supports creative writing tasks and helps generate stories or dialogues, saving time and effort in producing engaging content.

Data extraction

Few-shot prompting transforms data lifecycle management and extraction. It helps models find relevant information from unstructured data and requires minimal training.

This is useful in the finance and legal industries. It can process large amounts of text quickly and accurately. For instance, it can extract key contract terms and pull financial data from reports.

It reduces the need for large labeled datasets, making data extraction more efficient and adaptable and giving faster access to critical information.

What are some examples of few-shot prompting?

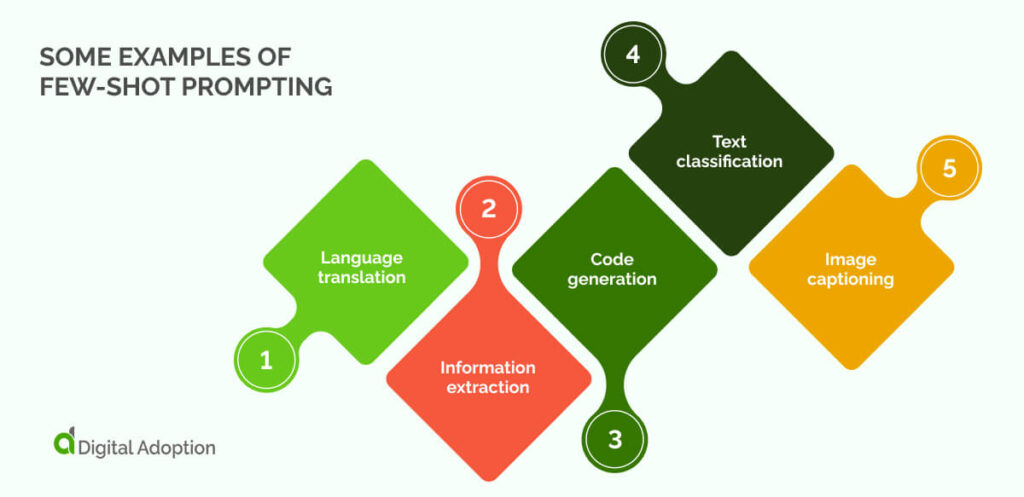

Few-shot prompting helps AI learn new tasks quickly, using just a handful of examples. This makes AI more flexible and useful in many areas.

From translating languages to analyzing data, it’s making a big impact.

These examples show how few-shot prompting is solving real problems. It’s helping businesses work smarter and faster, making AI more accessible for everyday use.

These examples will give you a clear picture of what few-shot prompting can do. They show its power and potential in today’s AI-driven world.

Let’s explore some real-world examples of few-shot prompting in action.

Language translation

AI can now translate between languages accurately using just a handful of examples. Shown a few sentence pairs, the model picks up the translation pattern quickly. For instance, given “I love AI” and “J’adore l’IA”, it can then translate “She studies robotics” into “Elle étudie la robotique”. This method can work even for less common phrases, making it a game-changer in multilingual communication.
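One way to present sentence pairs is as alternating chat turns. The sketch below assumes a generic list of role/content messages, a shape many chat-style interfaces accept; the exact schema depends on the provider, so treat the field names as an assumption. `translation_messages` is a hypothetical helper, and the second sentence pair is added here for illustration.

```python
from typing import Dict, List, Tuple

# Demonstration pairs. The first comes from the text above; the second
# is an added illustrative pair.
PAIRS: List[Tuple[str, str]] = [
    ("I love AI", "J'adore l'IA"),
    ("The weather is nice today", "Il fait beau aujourd'hui"),
]


def translation_messages(
    pairs: List[Tuple[str, str]], sentence: str
) -> List[Dict[str, str]]:
    """Express each pair as a user/assistant turn, then add the new sentence."""
    messages = [{"role": "system", "content": "Translate English to French."}]
    for english, french in pairs:
        messages.append({"role": "user", "content": english})
        messages.append({"role": "assistant", "content": french})
    # The final user turn is the sentence we actually want translated.
    messages.append({"role": "user", "content": sentence})
    return messages


messages = translation_messages(PAIRS, "She studies robotics")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Framing the examples as earlier turns of the conversation shows the model both the task and the expected reply style before it sees the new sentence.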

Information extraction

This technique enables AI to pull key details from unstructured text efficiently. Imagine teaching AI to spot dates in emails with just a few samples. After seeing examples like “Meeting scheduled for June 15, 2024”, it can identify dates in new, unseen messages. This proves incredibly useful in fields like law or finance, where precise information extraction is crucial.
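For extraction tasks, the examples can also fix the output format so that replies are easy to parse. The sketch below is an assumption-laden illustration: `extraction_prompt` and `parse_dates` are hypothetical helpers, the JSON-list format is one possible choice, the second example email is invented, and `sample_reply` is a hard-coded stand-in because no model is called.

```python
import json
from typing import List, Tuple

# The first email comes from the text above; the second is an added
# illustration showing what to return when no date is present.
EXAMPLES: List[Tuple[str, List[str]]] = [
    ("Meeting scheduled for June 15, 2024", ["June 15, 2024"]),
    ("Thanks for the update, talk soon.", []),
]


def extraction_prompt(email: str) -> str:
    """Pair each example email with a JSON list of the dates it contains."""
    lines = ["Extract every date from the email as a JSON list of strings.", ""]
    for text, dates in EXAMPLES:
        lines += [f"Email: {text}", f"Dates: {json.dumps(dates)}", ""]
    lines += [f"Email: {email}", "Dates:"]
    return "\n".join(lines)


def parse_dates(reply: str) -> List[str]:
    """Parse a model reply; return an empty list if it is not a JSON list."""
    try:
        parsed = json.loads(reply)
    except json.JSONDecodeError:
        return []
    if not isinstance(parsed, list):
        return []
    return [str(item) for item in parsed]


prompt = extraction_prompt("Invoice due March 3, 2025.")
# Stand-in for a model reply; a real reply may differ.
sample_reply = '["March 3, 2025"]'
print(prompt)
print(parse_dates(sample_reply))
```

Including an example with an empty result gives the model a pattern to follow when an email contains no dates, and the defensive parser guards against replies that stray from the format.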

Code generation

Few-shot prompting empowers AI to write code snippets based on minimal examples. Show it how to calculate squares in Python, and it can then figure out how to compute cubes. This accelerates coding tasks significantly, making it an invaluable asset for software developers who need to solve similar problems quickly.

Text classification

AI can now categorize text into predefined groups with minimal training. By providing examples, like “Great product!” as positive and “Terrible experience” as negative, the AI learns to classify new reviews accurately. This capability is particularly valuable for efficiently analyzing customer feedback or sorting large volumes of text data.
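A classification prompt built from these two reviews might look like the following sketch. The helper names (`classification_prompt`, `normalize_label`) and the `Review:`/`Sentiment:` labels are hypothetical. Because a model may answer with extra whitespace, capitalization, or punctuation, the sketch also maps a reply back onto the allowed labels.

```python
from typing import Optional

LABELS = ("positive", "negative")

# Both demonstrations come from the text above.
EXAMPLES = [
    ("Great product!", "positive"),
    ("Terrible experience", "negative"),
]


def classification_prompt(review: str) -> str:
    """Build a few-shot prompt that ends with an unlabeled review."""
    lines = [f"Classify each review as {' or '.join(LABELS)}.", ""]
    for text, label in EXAMPLES:
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    lines += [f"Review: {review}", "Sentiment:"]
    return "\n".join(lines)


def normalize_label(reply: str) -> Optional[str]:
    """Map a free-text reply onto a known label, or None if it matches neither."""
    cleaned = reply.strip().lower().rstrip(".!")
    return cleaned if cleaned in LABELS else None


prompt = classification_prompt("Arrived late and the box was damaged")
print(prompt)
# Stand-in replies; no model is called in this sketch.
print(normalize_label(" Positive.\n"))
print(normalize_label("mixed"))
```

Returning `None` for anything outside the label set makes unexpected replies visible instead of silently miscounting them.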

Image captioning

With just a few examples, a multimodal AI model (one that accepts images as well as text) can generate descriptive captions for images. After seeing a picture labeled “Cat lounging on the sofa,” it can create captions for new photos, such as “Dog chasing frisbee in the park.” This application enhances content engagement in digital marketing and social media, making visual content more accessible and searchable.

Few-shot prompting vs. zero-shot prompting vs. one-shot prompting

There are different ways to guide LLMs in doing tasks.

These include few-shot, zero-shot, and one-shot prompting. Each uses a different number of examples.

Let’s look at the differences.

Few-shot prompting

This gives the model a few examples (usually 2-5) before the task. This improves performance. It helps the model understand the task better while staying efficient.

Few-shot prompting is ideal when you need more accurate and consistent results, the task is complex or nuanced, and you have time to prepare a small set of representative examples.

Zero-shot prompting

This gives the model a task without examples, allowing it to use only its existing knowledge. This works when you need quick, flexible responses.

Zero-shot prompting is useful when you need immediate responses to new, unforeseen tasks, there is no time or resources to create examples, and the task is simple enough for the model to understand without examples.

One-shot prompting

This gives the model one example before the task. It guides the model better than zero-shot but needs little input.

One-shot prompting is effective when you want to provide minimal guidance to the model, the task is relatively straightforward but needs some context, and you are dealing with time or resource constraints.

Each method balances guidance and adaptability differently. The choice depends on the specific task, available resources, and desired outcome.
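In practice, the three approaches differ only in how many examples the prompt includes. The sketch below makes that explicit with a hypothetical `prompt_with_k_examples` helper; the pluralization task and the `Q:`/`A:` labels are invented for illustration.

```python
from typing import List, Tuple

# Illustrative examples, invented for this sketch.
EXAMPLES: List[Tuple[str, str]] = [
    ("cat", "cats"),
    ("box", "boxes"),
    ("city", "cities"),
]


def prompt_with_k_examples(
    task: str, examples: List[Tuple[str, str]], query: str, k: int
) -> str:
    """Build a prompt that includes the first k examples (k=0 means zero-shot)."""
    if k < 0:
        raise ValueError("k must be zero or greater")
    lines = [task, ""]
    for question, answer in examples[:k]:
        lines += [f"Q: {question}", f"A: {answer}", ""]
    lines += [f"Q: {query}", "A:"]
    return "\n".join(lines)


TASK = "Give the plural of each noun."
zero_shot = prompt_with_k_examples(TASK, EXAMPLES, "leaf", k=0)
one_shot = prompt_with_k_examples(TASK, EXAMPLES, "leaf", k=1)
few_shot = prompt_with_k_examples(TASK, EXAMPLES, "leaf", k=3)

for name, prompt in [("zero", zero_shot), ("one", one_shot), ("few", few_shot)]:
    print(f"--- {name}-shot ---")
    print(prompt)
```

Treating the number of examples as a parameter makes it easy to start with zero-shot and add examples only if the results are not accurate or consistent enough.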

Building reliable AI with few-shot prompting

Few-shot prompting is changing how we make AI systems. It helps create more reliable and adaptable AI. It bridges the gap between narrow and more flexible AI systems.

This method helps build AI that can do many tasks without lots of retraining. It’s useful when data is limited or things change quickly. It makes AI more practical for real-world use and lets it adapt easily to new challenges.

But it’s not perfect. The quality of results depends on good examples and the model’s knowledge. As we improve this technique, we’ll likely see better AI systems. They’ll be more robust and better at understanding what humans want.

The future of AI with few-shot prompting looks promising. It could lead to more intuitive and responsive systems. These systems could handle many tasks with little setup and help more industries use AI effectively.

Improved few-shot prompting could make advanced AI capabilities available to smaller businesses and organizations. These developments could significantly expand AI’s applications and impact across various fields.

People Also Ask

What are the advantages of few-shot prompting?

Few-shot prompting lets a model take on a new task from a handful of examples, without large labeled datasets or retraining. That makes AI faster to deploy and easier to adapt as requirements change, and it often produces more accurate and consistent results on specific tasks than prompting with no examples.

What are the limitations of few-shot prompting?

Few-shot prompting can struggle with complex tasks requiring deep context or specialized knowledge. Its performance heavily depends on the quality of the examples provided. It may also falter with rare or ambiguous data, leading to inaccuracies. Additionally, if the model lacks sufficient pre-training, its effectiveness can be limited, especially in highly nuanced scenarios.

What is the difference between context learning and few-shot prompting?

Context learning adjusts responses based on broader situational awareness using ongoing information. Few-shot prompting uses specific examples to guide task-specific outputs. Both improve model accuracy, but context learning leverages continuous data, while few-shot prompting focuses on minimal, explicit examples for particular tasks.