Seeing how an AI arrives at a conclusion is critical for building trust, governance, and explainability in AI systems.

All the data underpinning AI today goes to waste if these systems don’t know how to use it. Luckily, machine learning (ML), deep learning, and other AI subfields work together like cogs in a machine, improving how these solutions function.

LLMs, for example, employ natural language understanding (NLU) and natural language processing (NLP) to understand text, contextualize information, and perform actions.

Prompt engineering builds on NLP and NLU principles to develop techniques for guiding LLMs.

These techniques teach AI models different approaches to solving problems—which is key to ensuring their reliability and fairness.

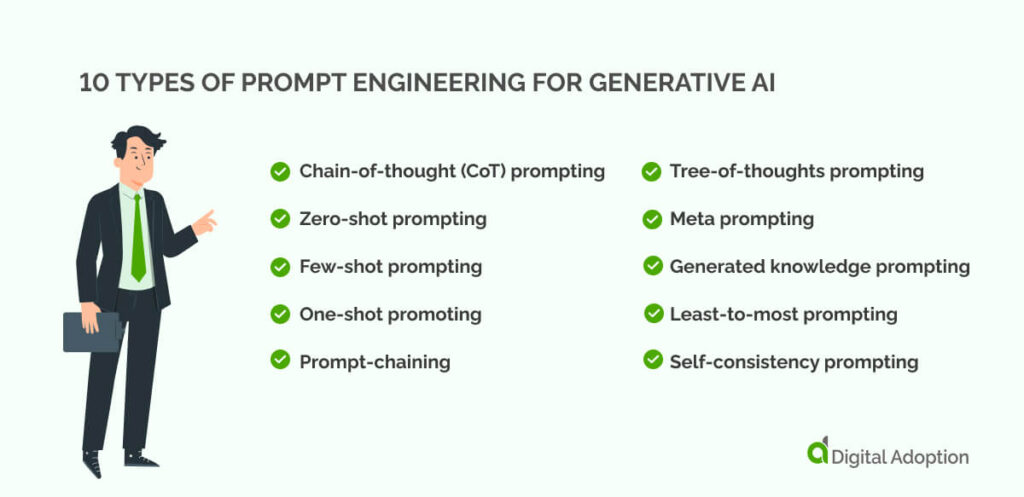

This article will explore different types of prompt engineering techniques to help you better understand how to effectively communicate with and guide AI language models.

- 1. Chain-of-thought (CoT) prompting

- 2. Zero-shot prompting

- 3. Few-shot prompting

- 4. One-shot prompting

- 5. Prompt chaining

- 6. Tree-of-thoughts prompting

- 7. Meta prompting

- 8. Generated knowledge prompting

- 9. Least-to-most prompting

- 10. Self-consistency prompting

- The role of prompt engineering going forward

- People Also Ask

1. Chain-of-thought (CoT) prompting

Let’s begin with chain-of-thought (CoT) prompting. This method helps to outline and clarify a model’s decision-making process. It prompts the model to spell out the steps it follows to complete a task, so that its reasoning can be observed.

With visibility over each step, the prompt can be tweaked and improved. However, CoT typically follows a linear progression, making it harder to backtrack and reconfigure once a step has been completed.

CoT prompting works by having the AI address one part of a problem at a time before moving forward. For example, when given a complex task, the model is asked to break it down. First, it outlines the problem, then picks out key information, and finally combines the details to reach a solution.
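The three steps above can be sketched as a small prompt builder. This is a minimal illustration, not a definitive template: the function name `build_cot_prompt`, the exact wording of the instructions, and the sample question are all assumptions made for this example.

```python
def build_cot_prompt(question: str) -> str:
    """Wrap a question in instructions that ask for step-by-step reasoning."""
    # The three steps mirror the outline / key information / combine sequence.
    steps = [
        "Outline the problem in your own words.",
        "Pick out the key information.",
        "Combine the details to reach a solution.",
    ]
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Question: {question}\n\n"
        "Work through the following steps in order, showing your reasoning for each:\n"
        f"{numbered}\n\n"
        "Finish with a line that starts with 'Answer:'."
    )


# Hypothetical sample question; the resulting string is what you would send to a model.
print(build_cot_prompt("A train leaves at 09:40 and arrives at 13:05. How long is the trip?"))
```

Asking for a final line that starts with a fixed marker makes it easier to separate the visible reasoning from the answer itself.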

When done right, CoT prompting helps AI give more accurate and reliable results, since each step can be checked. This prompt engineering technique is especially useful for understanding how the AI makes decisions.

2. Zero-shot prompting

Zero-shot prompting is a technique that leverages LLMs’ general knowledge capabilities to perform new activities and tasks.

It doesn’t need any examples or task-specific training, relying only on the model’s pre-existing knowledge to produce an answer. When fed clear and concise prompts, these models can create relevant responses for tasks they weren’t explicitly trained for.

In sales, a zero-shot prompt may look like: “Draft a cold email introducing our new SaaS product to potential clients.” Without providing any examples, the model uses its existing knowledge to create a compelling email based on the prompt alone.
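In code, a zero-shot prompt is simply the instruction plus any constraints, with no worked examples attached. The sketch below assumes a hypothetical helper, `build_zero_shot_prompt`; the audience and word limit are illustrative values, not part of the original example.

```python
def build_zero_shot_prompt(task: str, audience: str, max_words: int) -> str:
    """Return a prompt that states the task directly and contains no examples."""
    return (
        f"{task}\n"
        f"Audience: {audience}\n"
        f"Length: at most {max_words} words."
    )


prompt = build_zero_shot_prompt(
    task="Draft a cold email introducing our new SaaS product to potential clients.",
    audience="operations managers at mid-sized companies",  # assumed for illustration
    max_words=150,  # assumed for illustration
)
print(prompt)
```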

Zero-shot learning applies what the model already knows to make guesses and sort through new data. It’s vital for testing how well a model works independently.

3. Few-shot prompting

Few-shot prompting is another technique that asks LLMs to complete specific tasks with just a few examples.

Few-shot prompting uses a small set of examples, unlike zero-shot or one-shot prompting, which provide no examples or a single example, respectively.

The prompt typically gives the LLM around 2 to 5 examples of the desired output. Combining these examples with its pre-existing knowledge from training, the model can then infer what it thinks is the right answer.

When prompts are well-structured, LLMs can grasp and perform new tasks with just a few examples. The examples guide the model’s reasoning, and when the prompt includes enough of them, the model can begin to generate consistent and reliable outputs.

This type of prompt design technique is especially helpful when dealing with limited amounts of labeled data or when you want to quickly adapt a pre-trained LLM to new objectives.
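A few-shot prompt can be assembled by placing the worked examples ahead of the new input. The following is a minimal sketch: `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the sentiment reviews are assumptions chosen for illustration.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], new_input: str
) -> str:
    """Place worked input/output pairs ahead of the new input."""
    blocks = [f"Input: {text}\nOutput: {label}" for text, label in examples]
    # The final block is left open so the model completes the output.
    blocks.append(f"Input: {new_input}\nOutput:")
    return instruction + "\n\n" + "\n\n".join(blocks)


# Three hypothetical labeled reviews serve as the examples.
examples = [
    ("The onboarding was effortless.", "positive"),
    ("Support never replied to my ticket.", "negative"),
    ("The dashboard loads quickly.", "positive"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    examples,
    "Billing charged me twice.",
)
print(prompt)
```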

4. One-shot prompting

One-shot prompting involves providing the model with a single example of how to perform a task. It then prompts the model to perform similar tasks and measures how well a single input produces accurate outputs.

This contrasts with few-shot or zero-shot prompting. In one-shot prompting, the model receives one demonstration of the desired input-output pair. This simplified A-B structure serves as a template for subsequent inputs.
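The single input-output pair can be sketched as follows. The helper name `build_one_shot_prompt` and the date-conversion task are assumptions used only to make the A-B template concrete.

```python
def build_one_shot_prompt(
    instruction: str, example_input: str, example_output: str, new_input: str
) -> str:
    """Show exactly one demonstration (A -> B), then pose the new input."""
    return (
        f"{instruction}\n\n"
        f"Input: {example_input}\nOutput: {example_output}\n\n"
        f"Input: {new_input}\nOutput:"
    )


# Hypothetical task: one demonstration of the target date format.
prompt = build_one_shot_prompt(
    instruction="Rewrite the date in ISO 8601 format.",
    example_input="March 5, 2024",
    example_output="2024-03-05",
    new_input="July 19, 2023",
)
print(prompt)
```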

The one-shot method leverages the model’s pre-existing knowledge and ability to generalize, allowing it to understand the task’s context and requirements from just one example.

You could present the model with an image of a cat and then ask it to search for and classify other images that also contain cats. The model would use its knowledge and probabilistic ability to classify new images with just the initial reference.

5. Prompt chaining

Prompt chaining is a way to guide AI through complex tasks by using a series of linked prompts.

As the LLM becomes familiar with user inputs, it starts to home in on intent and gain a deeper understanding of what is being asked.

Prompt chaining is specifically designed to accomplish this, enabling LLMs to learn, build context, and improve their outputs.

In prompt chaining, a sequence of prompts is created, where each output is used to inform and refine the next. New prompt inputs recycle the previous output, creating a backlog of knowledge the model can draw upon to form new insights.
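The way each output feeds the next prompt can be sketched as a short loop. This is an illustration under stated assumptions: `run_chain` is a hypothetical helper, `model` stands for any function that maps a prompt string to a response string, and `echo_model` is a stand-in used here instead of a real LLM call so the data flow is visible.

```python
from typing import Callable, List


def run_chain(
    model: Callable[[str], str], templates: List[str], initial_input: str
) -> List[str]:
    """Run each prompt template in order, feeding the previous output into the next."""
    outputs = []
    previous = initial_input
    for template in templates:
        prompt = template.format(previous=previous)
        previous = model(prompt)  # this output becomes the next prompt's input
        outputs.append(previous)
    return outputs


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call: wraps the prompt so the chaining is visible."""
    return f"<{prompt}>"


# Hypothetical three-step chain over a customer review.
templates = [
    "Summarize this customer review: {previous}",
    "List the complaints in this summary: {previous}",
    "Draft a reply addressing these complaints: {previous}",
]
for step, output in enumerate(run_chain(echo_model, templates, "The app is slow."), start=1):
    print(step, output)
```

Keeping every intermediate output, rather than only the last one, makes it possible to inspect where in the chain a result went wrong.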

This process gradually sharpens the model’s reasoning, allowing it to handle more complex tasks and objectives.

The step-by-step nature of prompt chaining provides a more structured and targeted approach than other techniques, such as zero-shot, few-shot, or one-shot methods.

6. Tree-of-thoughts prompting

Tree of thoughts (ToT) prompting helps AI think in many ways at once. It teaches the AI to solve problems more like humans do.

By default, an LLM generates a single outcome, typically following a linear sequence of steps to reach it.

When decisions and tasks become more nuanced, getting the model to achieve the most accurate results is difficult.

ToT prompting addresses this by encouraging LLMs to explore multiple reasoning paths and solve problems dynamically.

These paths resemble the structure of a tree, each represented by multiple branches and nodes that explore and refine steps toward a final solution.

The multiple-pronged format of ToT prompting also allows the model to explore potential future outcomes, revise its approach, and revisit previous solution paths. This creates the dynamism LLMs require to perform nuanced objectives.
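One way to picture the branching is a search that expands several partial solutions at each level and keeps only the most promising ones. The sketch below is a simplified illustration, not a full ToT implementation: in practice `propose` and `score` would each be LLM calls, whereas here they are toy functions (assumed for this example) that build a string toward a hypothetical target.

```python
from typing import Callable, List


def tree_of_thoughts(
    root: str,
    propose: Callable[[str], List[str]],
    score: Callable[[str], float],
    beam_width: int = 2,
    depth: int = 3,
) -> str:
    """Level-by-level search over partial solutions, keeping the best few per level."""
    frontier = [root]
    for _ in range(depth):
        # Branch: every surviving state proposes several next thoughts.
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        # Prune: keep only the highest-scoring branches for the next level.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return max(frontier, key=score)


TARGET = "abb"  # toy goal, assumed for illustration


def propose(state: str) -> List[str]:
    """Toy stand-in for 'ask the model for possible next steps'."""
    return [state + "a", state + "b"]


def score(state: str) -> float:
    """Toy stand-in for 'ask the model to rate a partial solution'."""
    return sum(1 for got, want in zip(state, TARGET) if got == want)


print(tree_of_thoughts("", propose, score))
```

Because more than one branch survives each level, a path that looks weaker early on can still be revisited if the leading path stalls.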

7. Meta prompting

Meta prompting helps AI understand what we want better. It’s a way to give clear rules to the AI so it can give better answers.

LLMs can more efficiently interpret user queries and generate the intended result by defining the essential components of a desired prompt input.

Meta prompting involves formulating specific guidelines that direct LLMs toward focused responses.

This is done by giving the model important details, such as context, examples, and parameters like tone, format, style, or desired actions. These clear instructions help the LLM develop the necessary reasoning to complete complex, incremental processes.
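These components can be captured in a small structure that renders a prompt from whichever fields are filled in. The sketch below is one possible layout: the `PromptSpec` class, its field names, and the sample values are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptSpec:
    """Holds the components of a prompt: task, context, tone, format, examples."""

    task: str
    context: str = ""
    tone: str = ""
    output_format: str = ""
    examples: List[str] = field(default_factory=list)

    def render(self) -> str:
        """Emit one labeled line per component that has been provided."""
        lines = [f"Task: {self.task}"]
        if self.context:
            lines.append(f"Context: {self.context}")
        if self.tone:
            lines.append(f"Tone: {self.tone}")
        if self.output_format:
            lines.append(f"Format: {self.output_format}")
        for i, example in enumerate(self.examples, start=1):
            lines.append(f"Example {i}: {example}")
        return "\n".join(lines)


# Hypothetical values showing how the guidelines come together.
spec = PromptSpec(
    task="Summarize the attached release notes.",
    context="Readers are non-technical customers.",
    tone="friendly",
    output_format="three bullet points",
)
print(spec.render())
```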

The clearer the instructions we give an AI, the more accurate the answers become. Refining those instructions over repeated iterations improves the output until it consistently meets expectations.

8. Generated knowledge prompting

Generated knowledge prompting evaluates how effectively pre-trained large language models (LLMs) can leverage their existing knowledge base.

It involves first prompting the model to generate relevant knowledge from the vast training data it has been exposed to, then incorporating that output into new inputs.

As the model produces information on a given topic, it establishes a logical path to follow. It learns from this generated knowledge and applies it to refine future outputs.
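A common way to apply this is a two-stage call: first ask the model for relevant facts, then pass those facts back in alongside the question. The sketch below assumes a hypothetical `generated_knowledge_answer` helper and uses `fake_model`, a stand-in for a real LLM call, so the example runs on its own.

```python
from typing import Callable


def generated_knowledge_answer(
    model: Callable[[str], str], question: str, num_facts: int = 3
) -> str:
    """Stage 1: generate knowledge. Stage 2: answer using that knowledge."""
    knowledge_prompt = f"List {num_facts} facts that are relevant to answering: {question}"
    knowledge = model(knowledge_prompt)
    answer_prompt = (
        f"Knowledge:\n{knowledge}\n\n"
        f"Using the knowledge above, answer the question: {question}"
    )
    return model(answer_prompt)


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM: returns canned facts, then a canned answer."""
    if prompt.startswith("List"):
        return "- Fact A\n- Fact B\n- Fact C"
    return "Answer grounded in the supplied facts."


print(generated_knowledge_answer(fake_model, "Why do prompts benefit from context?"))
```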

This tests the model’s capacity to expand upon what it already knows, ensuring it can deepen its understanding. When taught what to learn, the model becomes better equipped to handle more dynamic objectives.

A crucial cycle is created that ensures accuracy and depth in model performance. This is needed in enterprise-level solutions, where accuracy and depth of reasoning are non-negotiable.

9. Least-to-most prompting

Least-to-most prompting creates a clear learning path by guiding the model through tasks that grow in complexity.

It starts with simple prompts focused on basic skills. Once the model masters these, more detailed and complex instructions follow, allowing it to build on what it has learned.
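The progression from simple to complex can be sketched as a loop in which every new prompt carries the earlier questions and answers. This is a minimal illustration with assumptions: the sub-questions are written by hand here (they could also be produced by the model), `least_to_most` is a hypothetical helper, and `counting_model` is a stand-in for a real LLM call.

```python
from typing import Callable, List, Tuple


def least_to_most(
    model: Callable[[str], str], subquestions: List[str]
) -> List[Tuple[str, str]]:
    """Answer sub-questions from easiest to hardest, carrying earlier answers forward."""
    solved: List[Tuple[str, str]] = []
    for question in subquestions:
        # Earlier question/answer pairs are prepended so the model can build on them.
        history = "".join(f"Q: {q}\nA: {a}\n" for q, a in solved)
        answer = model(f"{history}Q: {question}\nA:")
        solved.append((question, answer))
    return solved


def counting_model(prompt: str) -> str:
    """Stand-in for a real LLM: reports how many questions the prompt contains."""
    return f"answer {prompt.count('Q:')}"


# Hypothetical sub-questions, ordered from simplest to most complex.
steps = [
    "How many minutes does one lap take?",
    "How many laps fit in one hour?",
    "How many laps fit in a three-hour session with a 30-minute break?",
]
for question, answer in least_to_most(counting_model, steps):
    print(question, "->", answer)
```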

This ensures the model understands basic tasks before moving on to harder ones. The model becomes better prepared to handle complex goals requiring deeper reasoning and logic.

A gradual learning curve reduces mistakes and strengthens the model’s ability to manage complicated tasks, even in challenging scenarios.

This technique is key for bridging the gap between simple and complex problem-solving. It allows models to be reconfigured to tackle challenges beyond their original design.

10. Self-consistency prompting

Self-consistency prompting tries to get the best answer by exploring many thinking paths. It asks the same question many times and then picks the answer that comes up most often.

LLMs interpret and contextualize data probabilistically, resulting in diverse and sometimes inconsistent outputs for each new query.

This mirrors how a group of experts can present different viewpoints on the same problem despite sharing similar skills and knowledge.

This technique runs the same prompt multiple times and then aggregates the results to confirm the most consistent output. Reinforcing the right answer establishes a clear path of reasoning the model can follow to effectively problem-solve future queries.
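The aggregation step can be sketched as a majority vote over repeated samples. In the example below, `self_consistent_answer` is a hypothetical helper and `fake_sample` is a stand-in that replays canned answers; a real setup would call a model with a non-zero sampling temperature so that runs can differ.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def self_consistent_answer(sample: Callable[[str], str], prompt: str, n: int = 5) -> str:
    """Run the same prompt n times and return the most frequent answer."""
    answers = [sample(prompt).strip() for _ in range(n)]
    winner, _count = Counter(answers).most_common(1)[0]
    return winner


# Stand-in sampler: replays a fixed sequence of answers instead of calling a model.
_canned = cycle(["42", "41", "42", "42", "40"])


def fake_sample(prompt: str) -> str:
    return next(_canned)


print(self_consistent_answer(fake_sample, "What is 6 * 7?"))
```

Voting works best when the final answer is short and easy to compare, such as a number or a label, which is why the answers are stripped of whitespace before counting.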

Self-consistency design enhances the probability of generating the right answer by comparing various potential routes. As a result, it improves outputs and elevates the model’s ability to handle more consequential objectives.

The role of prompt engineering going forward

Prompt engineering will be a defining force in developing reliable and trustworthy AI.

Moving toward fully accurate AI outputs tomorrow means refining outputs and making them more consistent today. Overzealous implementation, however, can lead to unexpected results.

Automated prompt engineering (APE) uses AI to create and refine prompts for other AIs. Its machine-learning algorithms can generate, test, and polish prompts independently.
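The generate-test-polish loop can be reduced to its testing step: score each candidate prompt against a small set of known input-output pairs and keep the best one. The sketch below is a simplified illustration, not a description of any specific APE system. The candidates are written by hand here (in APE they would be generated by a model), and `pick_best_prompt` and `fake_model` are hypothetical names introduced for this example.

```python
from typing import Callable, List, Tuple


def pick_best_prompt(
    model: Callable[[str], str],
    candidates: List[str],
    eval_set: List[Tuple[str, str]],
) -> str:
    """Return the candidate template with the highest accuracy on the eval set."""

    def accuracy(template: str) -> float:
        hits = sum(model(template.format(input=x)) == expected for x, expected in eval_set)
        return hits / len(eval_set)

    return max(candidates, key=accuracy)


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM: uppercases the input only when asked to."""
    text = prompt.rsplit(": ", 1)[-1]
    return text.upper() if "uppercase" in prompt.lower() else text


candidates = ["Repeat: {input}", "Rewrite in uppercase: {input}"]
eval_set = [("cat", "CAT"), ("dog", "DOG")]
print(pick_best_prompt(fake_model, candidates, eval_set))
```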

APE could be a game-changer down the road, slashing the time and know-how needed for solid prompt design. This could open the door to LLMs’ untapped potential.

LLM outputs can sometimes lack accuracy and reliability due to several factors. These include biases or gaps in training data, the ambiguity of natural language, and the probabilistic nature of LLMs, which generate the most likely response rather than a definitive answer.

These oversights can escalate into big issues, from spreading fake news to messing up key decisions in critical sectors. As AI becomes more ubiquitous, getting these details right is a big deal. It underscores why we need solid, responsible prompt engineering.

People Also Ask


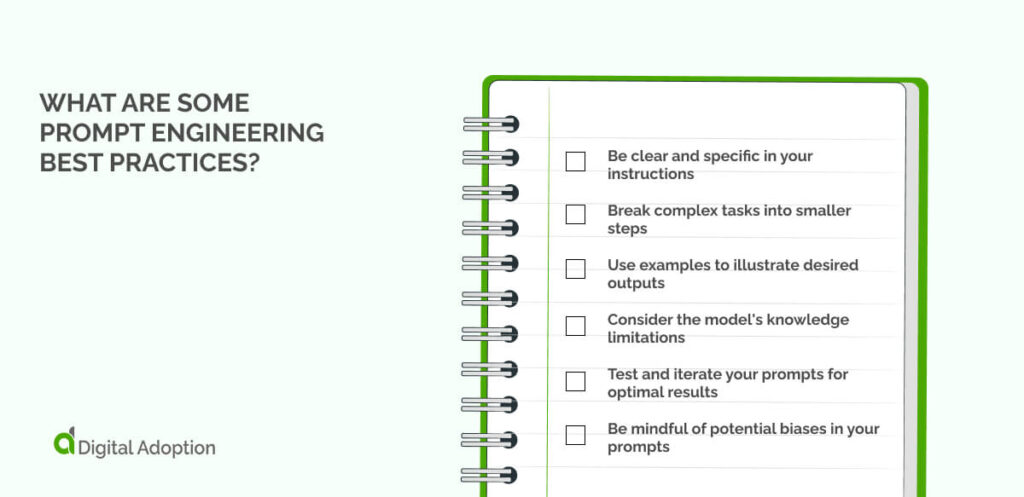

What are some prompt engineering best practices?

Effective prompt engineering relies on clarity, specificity, and keeping the end goal in mind. Here are key practices to remember:

1. Be clear and specific in your instructions
2. Break complex tasks into smaller steps
3. Use examples to illustrate desired outputs
4. Consider the model’s knowledge limitations
5. Test and iterate your prompts for optimal results
6. Be mindful of potential biases in your prompts


Which prompt engineering technique is best?

There’s no universal best technique. The most effective approach depends on your task, model, and goals. Most of the methods outlined in this article are widely used. Experiment with different techniques and combine them as needed. Start simple and increase complexity slowly, focusing on what produces the most reliable results for your goal.