In the world of AI language models, getting accurate and reliable answers is a key challenge.

As these machine-learning models grow more complex, researchers and developers seek new ways to improve their performance.

One method that has gained attention is self-consistency prompting. This approach offers a unique way to enhance AI outputs, drawing inspiration from how humans think and make decisions.

Analyzing this technique reveals its potential to revolutionize AI interactions and problem-solving.

This article explores self-consistency prompting, how it works, examples, and applications to help you understand this innovative AI technique.

- What is self-consistency prompting?

- How does self-consistency prompting work?

- What are some examples of self-consistency prompting?

- What are some applications of self-consistency prompting?

- Self-consistency prompting vs. tree of thoughts prompting (ToT) vs. chain-of-thought prompting (CoT)

- Self-consistency prompting is key for AI reliability

- People Also Ask

What is self-consistency prompting?

Self-consistency prompting is a prompt engineering technique in which a large language model (LLM) generates multiple outputs for a single query. By exploring several reasoning paths and keeping the answer they agree on most often, the technique selects the most consistent output, which tends to improve the accuracy of the model’s responses.

Typically, an LLM produces its output by sampling from a probability distribution over possible next tokens (words or word fragments), so the same input can result in varied and inconsistent outputs. Self-consistency prompting turns this variability into an advantage: comparing multiple generated responses increases the chances of finding an accurate answer. This approach strengthens the model’s ability to reason through complex tasks.

A study by Polaris Market Research predicts that the prompt engineering market, currently valued at $280 million, will reach $2.5 billion by 2032. Self-consistency prompting is one of the techniques driving improvements in LLM performance.

How does self-consistency prompting work?

Self-consistency prompting builds on other prompt engineering techniques, such as chain-of-thought (CoT) and few-shot prompting, to strengthen an LLM’s reasoning.

While CoT and few-shot prompting aim to generate accurate outputs through a single line of reasoning, self-consistency prompting goes further by exploring multiple reasoning paths for the same problem.

For instance, self-consistency prompting samples several chain-of-thought responses to the same question, usually with some randomness (a sampling temperature above zero) so that each reasoning path differs. The final answers from these independent paths are then compared, and the answer reached most often is selected. When separate lines of reasoning converge on the same answer, that answer is more likely to be correct.
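The voting step can be sketched in a few lines of Python. This is a minimal sketch, not a production implementation: `sample` is a hypothetical stand-in for a call to whatever LLM API you use (with a temperature above zero so that runs differ), and `extract_answer` assumes each completion ends with the phrase “The answer is X.”, a format you would request in the prompt.

```python
import re
from collections import Counter
from itertools import cycle
from typing import Callable, List, Optional


def extract_answer(completion: str) -> Optional[str]:
    """Return X from a completion ending in 'The answer is X.' (assumed format), else None."""
    match = re.search(r"The answer is\s+(.+?)\.?\s*$", completion.strip())
    return match.group(1).strip() if match else None


def self_consistency(sample: Callable[[str], str], prompt: str, n: int = 5) -> Optional[str]:
    """Sample n reasoning paths and return the most common final answer (majority vote)."""
    answers: List[str] = []
    for _ in range(n):
        answer = extract_answer(sample(prompt))
        if answer is not None:  # skip completions that never state a final answer
            answers.append(answer)
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Hypothetical canned completions standing in for real, randomly sampled LLM outputs.
canned = cycle([
    "16 - 3 - 4 = 9 eggs, and 9 * 2 = 18. The answer is 18.",
    "She sells 9 eggs at $2 each, earning $18. The answer is 18.",
    "16 - 3 = 13, 13 - 4 = 9, 9 * 2 = 16. The answer is 16.",  # an arithmetic slip
])
print(self_consistency(lambda p: next(canned), "How many dollars does she make?", n=5))  # 18
```

The third path contains a mistake, but because it is outvoted by the paths that agree, the final answer is still 18. Completions that never state an answer are skipped rather than counted, so malformed outputs cannot sway the vote.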

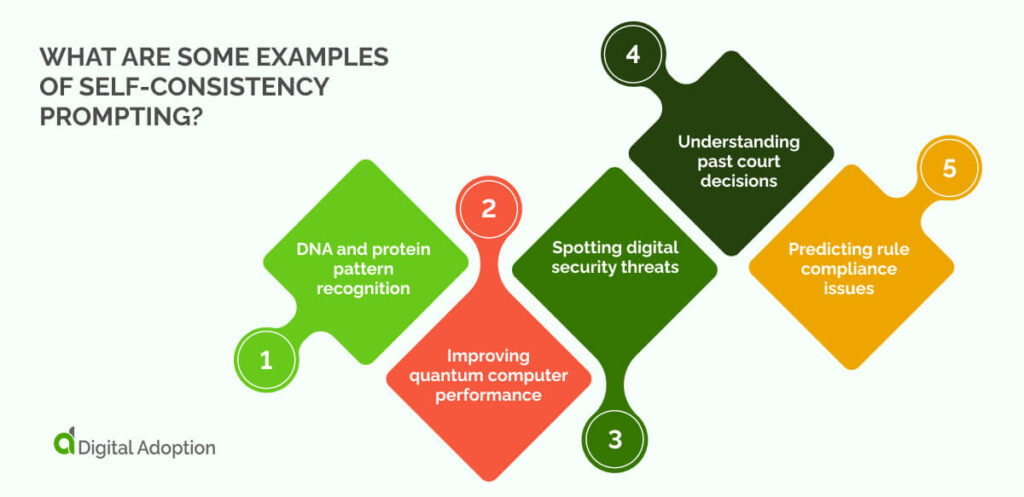

What are some examples of self-consistency prompting?

This section provides practical examples of how self-consistency prompting can be applied across different domains.

Let’s take a closer look:

DNA and protein pattern recognition

Scientists can utilize self-consistent models to find patterns in DNA. They can examine a patient’s genetic information, analyze genetic markers, and identify variations in DNA. This can help indicate susceptibility to certain diseases, inform treatment options, or reveal inherited conditions.

Example: A scientist asks once: “Find disease markers in this DNA sequence.”

The system runs this prompt several times, and each run might focus on different aspects, like gene activity or DNA structure. The system then finds the patterns that show up most often across all runs, and the scientist reviews these common patterns to confirm the most likely disease markers.
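When each run returns a set of findings rather than a single answer, the vote becomes a count of how many runs reported each item. The sketch below assumes the marker names have already been parsed out of the model’s responses; the names are placeholders, and the 50% cutoff (`min_fraction`) is an arbitrary illustrative choice, not a recommended value.

```python
from collections import Counter
from typing import List, Set


def consistent_items(runs: List[Set[str]], min_fraction: float = 0.5) -> List[str]:
    """Keep items reported in at least min_fraction of runs, most frequent first."""
    counts = Counter(item for run in runs for item in run)
    threshold = min_fraction * len(runs)
    kept = [(item, count) for item, count in counts.items() if count >= threshold]
    kept.sort(key=lambda pair: (-pair[1], pair[0]))  # by frequency, then alphabetically
    return [item for item, _ in kept]


# Placeholder findings from three runs of the same prompt.
runs = [
    {"marker_A", "marker_B"},
    {"marker_A", "marker_C"},
    {"marker_A", "marker_B", "marker_D"},
]
print(consistent_items(runs))  # ['marker_A', 'marker_B']
```

Raising `min_fraction` keeps only the findings the runs agree on most strongly, at the risk of dropping real but less frequently reported markers; either way, the scientist still reviews what survives.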

Improving quantum computer performance

Quantum computing presents a huge learning curve. Trial and error often lead to mistakes. Self-consistency techniques help fix this. They run the same setup query many times to find the best option. This helps stabilize quantum algorithms in future runs.

Example: An expert enters one prompt: “Optimize this quantum circuit for factoring.”

The system runs this prompt repeatedly. Because the model samples its output, each run may propose a different setup, and executing the candidate circuits adds further variation, since measuring qubits in superposition and entanglement gives probabilistic results. Researchers analyze these differences to adjust qubit states, modify gate operations, or improve error correction. The expert then checks the top-ranked setups to pick the best-performing one.
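One way to rank the proposed setups is weighted voting: each run’s proposal counts toward its candidate in proportion to a score, for example a fidelity estimate from a simulator. This is a hypothetical sketch; the layout names and scores are invented for illustration, and how a score is obtained is left open.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def rank_by_weighted_votes(proposals: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Sum each candidate's scores across runs and rank candidates from highest total."""
    totals: Dict[str, float] = defaultdict(float)
    for candidate, score in proposals:
        totals[candidate] += score
    return sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical (setup, score) pairs from five runs of the same prompt.
proposals = [
    ("layout_1", 0.90),
    ("layout_2", 0.60),
    ("layout_1", 0.80),
    ("layout_3", 0.95),
    ("layout_2", 0.70),
]
print([name for name, _ in rank_by_weighted_votes(proposals)])  # ['layout_1', 'layout_2', 'layout_3']
```

Because scores are summed, a setup proposed by several runs can outrank one that scored highest in a single run, as `layout_3` does here. That reflects the self-consistency intuition of trusting agreement over one confident outlier.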

Spotting digital security threats

Self-consistency methods can boost cybersecurity practices and keep networks safe by running the same threat detection query multiple times.

Example: A security team uses: “Identify threats in this network data.”

The model examines different factors with each run, such as traffic anomalies, login patterns, or unauthorized access attempts. The system compiles these results into a threat map, identifying which risks appear the most. Cybersecurity personnel can then configure additional monitoring for the most prominent threats.
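A threat map like this can be approximated by recording, for each threat, the share of runs that reported it, and flagging low-agreement threats for a human analyst instead of discarding them. This is a minimal sketch, assuming the threat labels have already been extracted from each run’s response; the labels and the 0.5 review cutoff are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple


def build_threat_map(
    runs: List[Set[str]], review_below: float = 0.5
) -> Tuple[Dict[str, float], List[str]]:
    """Return {threat: share of runs reporting it} and the threats below the review cutoff."""
    if not runs:
        return {}, []
    counts = Counter(threat for run in runs for threat in run)
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))  # most reported first
    threat_map = {threat: count / len(runs) for threat, count in ordered}
    needs_review = [threat for threat, share in threat_map.items() if share < review_below]
    return threat_map, needs_review


# Hypothetical threat labels from four runs of the same detection prompt.
runs = [
    {"traffic_spike", "failed_logins"},
    {"failed_logins"},
    {"failed_logins", "port_scan"},
    {"traffic_spike", "failed_logins"},
]
threat_map, needs_review = build_threat_map(runs)
print(threat_map)    # {'failed_logins': 1.0, 'traffic_spike': 0.5, 'port_scan': 0.25}
print(needs_review)  # ['port_scan']
```

Keeping the low-agreement threats in a review list, rather than filtering them out, lets the security team decide whether a rarely reported signal is noise or an early warning.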

Understanding past court decisions

Lawyers can use self-consistency design to study historic court rulings.

Example: “Analyze historic court rulings on privacy rights, focusing on landmark cases, their societal context, and the impact of technological advancements.”

The model runs the same legal query multiple times, focusing on legal principles, social norms, or tech advancements. After each iteration, the results are compared to highlight key legal trends or changes in interpretation. This process helps enrich legal teams’ understanding of past rulings and anticipate future outcomes in constitutional rights.
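Comparing the runs can also produce a single consistency score. The sketch below uses the average pairwise Jaccard similarity (size of the overlap divided by size of the union) between the sets of themes each run identified; a low score suggests the query is being read in different ways and deserves a closer look. The theme labels are hypothetical, and Jaccard similarity is just one reasonable choice of measure.

```python
from itertools import combinations
from typing import List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the overlap divided by the size of the union (1.0 for two empty sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def mean_pairwise_agreement(runs: List[Set[str]]) -> float:
    """Average Jaccard similarity over every pair of runs (1.0 if fewer than two runs)."""
    pairs = list(combinations(runs, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical themes extracted from three runs of the same legal query.
runs = [
    {"consent", "surveillance"},
    {"consent", "surveillance", "data_retention"},
    {"consent"},
]
print(f"{mean_pairwise_agreement(runs):.2f}")  # 0.50
```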

Predicting rule compliance issues

Self-consistency models offer a new level of confidence in analyzing company policies. They examine policies from various perspectives, focusing on how employees interpret rules and simulating different scenarios to assess potential outcomes.

Example: “Evaluate the remote work policy by analyzing interpretations and simulating various employee scenarios.”

This method improves the identification of conflicts between regulations and areas of non-compliance. It also increases company oversight, surfacing issues that might otherwise stay hidden.

Now, companies are primed to proactively address concerns, reduce the risk of costly violations, and ensure adherence to legal requirements.

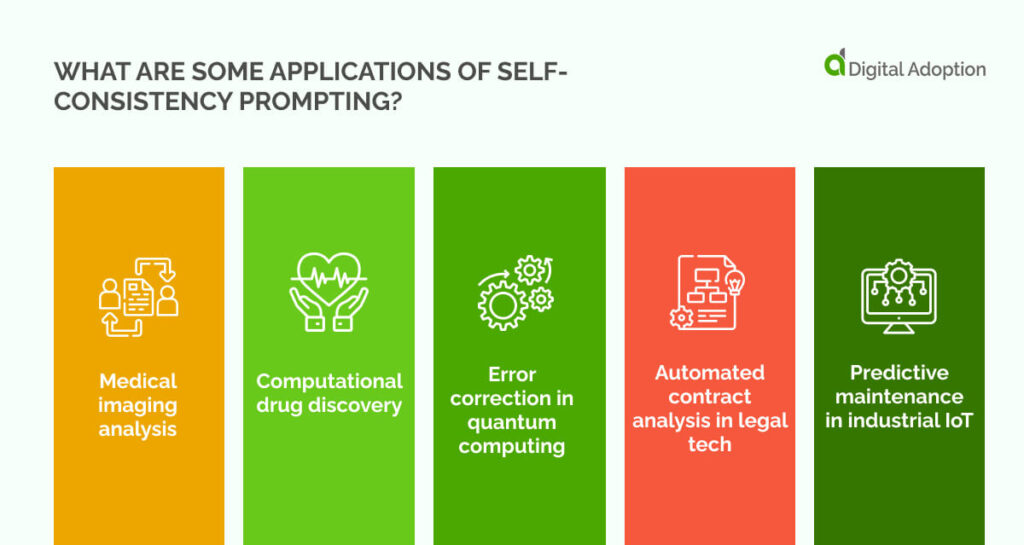

What are some applications of self-consistency prompting?

Now that we’ve dissected some examples of crafting effective self-consistency prompts, let’s explore the key areas and use cases where these techniques can be applied.

Medical imaging analysis

X-rays, CT scans, MRIs, and other medical images help doctors find diseases.

Even for trained eyes, spotting early signs of future issues and hidden trends is hard. AI can help detect health problems in medical images more accurately. Self-consistent models help examine organ shapes, sizes, and structures, which can reveal small issues that are hard to see.

These models might eventually make personalized care plans. They could also predict how well a treatment will work based on your scans. This would speed up discovery and change how we treat tough illnesses like cancer.

Computational drug discovery

Scientists are always looking for new medicines. Self-consistent models now help in this search. These models examine many chemicals at once, assessing how they might work as drugs while checking for side effects.

AI can study chemical interactions faster than humans, meaning it may uncover candidate drugs that researchers would otherwise miss.

Future AI could even design gene-tailored drugs, leading to better treatments while mitigating side effects. This would speed up drug discovery and open new paths for treating major diseases like cancer.

Error correction in quantum computing

Quantum computers show promise, but their experimental hardware is prone to errors. LLMs that use self-consistency prompting can help address this.

These models analyze quantum circuits to help reduce noise and extend coherence time. The LLMs propose many variations, adjusting quantum gates and qubit interactions, and each variation can be checked in simulation. Comparing the results points to the most stable setups, which helps improve quantum error correction.

For specific algorithms, LLMs suggest custom error-fixing strategies. They help design stronger quantum circuits that work longer, driving new developments in quantum computing and making it more accessible.

Automated contract analysis in legal tech

LLMs that use self-consistency prompting offer better contract review for law firms.

LLMs analyze contracts from many angles, comparing clauses to legal rulings. They find issues and unclear language and suggest fixes. Checking multiple interpretations ensures a more thorough analysis.

Lawyers can also leverage self-consistent models to review contracts faster and more reliably. LLMs help draft stronger agreements by predicting disputes and suggesting protective clauses.

Predictive maintenance in industrial IoT

LLMs that use self-consistency prompting analyze factory sensor data to help prevent equipment failure.

LLMs process machine data and compare it to past patterns and models. They generate and check multiple failure scenarios to find the most likely outcomes, which can lead to more accurate predictions.

Factory leads can plan maintenance at the right time. The manufacturing AI also suggests ways to improve machine performance and save energy. These LLMs are part of the AI advances bringing in Industry 4.0.

Self-consistency prompting vs. tree of thoughts prompting (ToT) vs. chain-of-thought prompting (CoT)

Prompt engineering might seem as straightforward as teaching AI how to function, but it’s easy to overlook the distinct methods, each suited to achieve specific outcomes.

Let’s break down a few key techniques. Some explore several reasoning paths at once, while others follow a more linear progression.

Self-consistency prompting

Self-consistency prompting generates multiple solutions and selects the most consistent one. It usually uses CoT or few-shot prompting as a baseline for reasoning and is generally used for tasks that can be reasoned through in several ways but have one final answer, such as arithmetic or commonsense questions. Comparing several lines of reasoning, much as a person might double-check their work, leads to better results.

Tree of Thoughts (ToT) prompting

Tree of Thoughts (ToT) prompting breaks big problems down into a decision tree. The AI explores different paths, going back if needed. This method is great for planning and solving complex problems step by step.

Chain-of-thought (CoT) prompting

Chain-of-thought (CoT) prompting asks the AI to lay out its reasoning step by step, improving transparency in how it reaches decisions. This method works well for logical tasks where you need to see each step of the thinking process.

Each method has its strengths and limitations. Self-consistency is good for tasks with many possible reasoning paths to a single answer. Tree-of-thought prompting helps with planning, while chain-of-thought works well for logical tasks.

LLMs that use self-consistency prompting often do better on multi-step reasoning tasks. They produce diverse answers and check them against each other. This mimics how humans make decisions, leading to more accurate model outputs.

Self-consistency prompting is key for AI reliability

Where careful decisions are crucial, self-consistency prompting techniques can deliver reliability. This could mean discovering new medicine in healthcare or strengthening cybersecurity in the finance sector.

As research continues, we’ll likely see more powerful uses of this technique. It may help create AI systems that can better handle complex, real-world problems.

As long as early adopters actively address and mitigate the material risks AI poses, prompt engineering can make AI-driven solutions more trustworthy and versatile.

People Also Ask

- How does self-consistency prompting improve AI accuracy?

Self-consistency prompting makes AI more accurate by creating many answers to a problem. The AI then compares these answers, finding common parts. This helps remove errors, leading to better results. It’s like getting many opinions within the AI itself, using the best parts of each to find the right answer.

- Can self-consistency prompting be used with any language model or AI system?

Self-consistency prompting works best with advanced AI models, which can create many good answers. However, not all AI systems can use this technique. AI needs to provide multiple clear answers to the same question, so simpler AI systems might not benefit as much from this method.

- Where does self-consistency prompting fall short?

Self-consistency prompting isn’t perfect for all tasks. It works best when answers can be compared directly, such as a number or a multiple-choice option; for open-ended writing, it is harder to tell which answers agree. It also uses more computing power, as it makes many answers, which could be a problem when speed is important. Also, if the AI’s basic knowledge is wrong, it might repeat the same mistake in all its answers, and a vote cannot fix an error that every answer shares.