Guiding large language models (LLMs) to generate targeted and accurate outcomes is challenging. Advances in natural language processing (NLP) and natural language understanding (NLU) mean LLMs can accurately perform several tasks if given the right sequence of instructions.

Through carefully tailored prompt inputs, LLMs combine natural language capabilities with a vast pool of pre-existing training data to produce more relevant and refined results.

Least-to-most prompting is a key prompt engineering technique for achieving this. It guides the model toward better outputs by supplying specific instructions, facts, and context in stages. This improves the model’s ability to solve complex tasks by breaking them down into smaller sub-steps.

As AI becomes more ubiquitous, honing techniques like least-to-most prompting can fast-track innovation for AI-driven transformation.

This article will explore least-to-most prompting, along with applications and examples to help you better understand core concepts and use cases.

What is least-to-most prompting?

Least-to-most prompting is a prompt engineering technique in which task instructions are introduced gradually, starting with simpler prompts and progressively adding more complexity.

This method helps large language models (LLMs) tackle problems step-by-step, enhancing their reasoning and ensuring more accurate responses, especially for complex tasks.

By building on the knowledge from each previous prompt, the model follows a logical sequence, enhancing understanding and performance. This technique mirrors human learning patterns, allowing AI to handle challenging tasks more effectively.
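As a rough illustration, the sequence described above can be sketched in Python. The `ask_model` callable is a hypothetical stand-in for whatever LLM client you use (it is not a real library API), and the sub-questions are written by hand here, although a first decomposition prompt could ask the model to produce them.

```python
from typing import Callable, List, Tuple


def least_to_most(
    problem: str,
    subquestions: List[str],
    ask_model: Callable[[str], str],
) -> List[Tuple[str, str]]:
    """Answer sub-questions in order, from simplest to hardest.

    Every prompt carries the question/answer pairs solved so far, so each
    step can build on the previous ones. `ask_model` is a hypothetical
    stand-in for a real LLM call.
    """
    solved: List[Tuple[str, str]] = []
    for question in subquestions:
        # Earlier answers become context for the next, harder question.
        context = "".join(f"Q: {q}\nA: {a}\n" for q, a in solved)
        prompt = f"Problem: {problem}\n{context}Q: {question}\nA:"
        solved.append((question, ask_model(prompt)))
    return solved


if __name__ == "__main__":
    # Toy model so the sketch runs without any external service.
    def toy_model(prompt: str) -> str:
        earlier_steps = prompt.count("Q:") - 1
        return f"(answer that can use {earlier_steps} earlier step(s))"

    steps = [
        "What are the main parts of the system?",
        "How does data flow between those parts?",
        "Where are the likely weak points?",
    ]
    for q, a in least_to_most("Review an e-commerce system", steps, toy_model):
        print(q, "->", a)
```

The design choice worth noting is that the context grows with every step: the final, hardest question is asked with all earlier answers in view rather than in isolation.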

When combined with other methods like zero-shot, one-shot, and tree of thoughts (ToT) prompting, least-to-most prompting can support more sustainable and ethical AI development by helping reduce inaccuracies and maintain high-quality outputs.

Why is least-to-most prompting important?

Our interactions with AI increase by the day. Despite skepticism about its long-term impacts, AI adoption is growing quickly and becoming more ingrained in major sectors of society.

Least-to-most prompting will be key to advancing AI capabilities and achieving a reliable and sustainable state. Through least-to-most prompt design, organizations can improve the performance and speed of AI systems.

This method’s importance lies in its ability to bridge the gap between simple and intricate problem-solving. It enables AI models to address challenges they weren’t specifically trained to handle.

This technique can drive innovation by enabling AI systems to handle sophisticated tasks and objectives. The result? New possibilities for scalable automation and augmented decision support across industries.

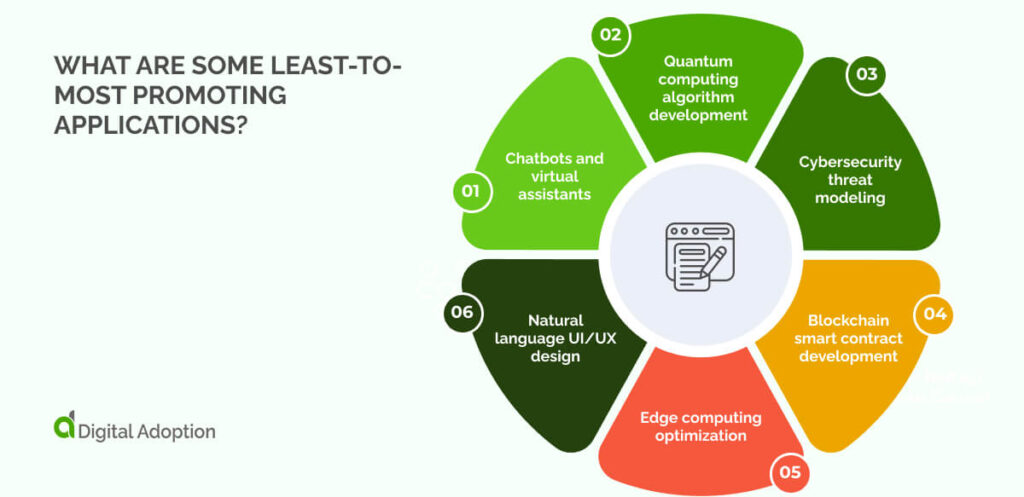

What are some least-to-most prompting applications?

Least-to-most prompting is a versatile approach that enhances problem-solving and development across various technological domains.

These range from user interaction systems to advanced computational fields and security paradigms.

Let’s take a closer look:

Chatbots and virtual assistants

Least-to-most prompting can help chatbots and virtual assistants generate better answers. This method helps engineers design generative chatbots that can talk and interact with users more effectively.

Think about a customer service chatbot. It starts by asking simple questions about what you need. It then probes for more specific issues. This way, the chatbot can home in on the right information to solve your problem quickly and correctly.

In healthcare, virtual assistants use this method, too. They start by asking patients general health questions, then inquire about specific symptoms. This builds a fuller picture of patient health that supports medical professionals.

Quantum computing algorithm development

Least-to-most prompting can contribute to the enigmatic world of quantum computing. Researchers use it to break big problems into smaller, easier parts.

When improving quantum circuits, developers start with simple operations and slowly add more complex parts. This step-by-step method helps them fix errors and improve the algorithm as they go.

This method also helps teach AI models about quantum concepts. The AI can then help design and analyze algorithms. This could speed up new ideas in the field, leading to breakthroughs in code-breaking and new medicinal discoveries.

Cybersecurity threat modeling

In cybersecurity, least-to-most prompting helps security experts train AI systems to spot weak points in security infrastructure. It can also help refine security protocols and mechanisms by systematically finding and assessing risk.

They might start by looking at the basic network layout. Then, they move on to more complex threat scenarios. As the AI learns more, it can mimic tougher attacks. This helps organizations improve their cybersecurity posture.

Least-to-most prompting can also support better tools that search for weaknesses in systems and apps. These tools gradually make test scenarios harder, improving system responses and strengthening defenses.

Blockchain smart contract development

Least-to-most prompting is very useful for making blockchain smart contracts. It guides developers to create safe, efficient contracts with fewer weak spots.

They start with simple contract structures and slowly add more complex features. This careful approach ensures that developers understand each part of the smart contract before moving on to harder concepts.

This method can also be used to create AI tools that check smart contract code. These tools learn to find possible problems, starting from simple errors and moving to more subtle security issues.

Edge computing optimization

In edge computing, least-to-most prompting helps manage resources and processing better. It can be used to develop smart systems that handle edge devices and their workloads well.

The process might start with recognizing devices and prioritizing tasks. Then, it adds more complex factors like network speed and power use. This step-by-step approach creates advanced edge computing systems that work well in different situations.

Least-to-most prompting can also train AI to predict when edge devices need maintenance. It starts with basic performance measures and slowly adds more complex diagnostic data. These AI models can then accurately predict potential issues and help devices last longer.

Natural language UI/UX design

In natural language UI/UX design, least-to-most prompting helps create easy-to-use interfaces. This approach builds conversational interfaces that adapt to users’ familiarity with the system.

Designers can start with basic voice commands or text inputs. They slowly add more complex interactions as users get better at using the system. This gradual increase in complexity keeps users from feeling overwhelmed, leading to a better user experience.

This method can also develop AI systems that create UI/UX designs based on descriptions. Starting with basic design elements and slowly adding more complex parts, these systems can create user-friendly interfaces that match requests.

Least-to-most prompting examples

This section provides concrete example prompts of least-to-most prompting in action.

Using the previously mentioned application areas as a foundation, each sequence demonstrates the gradual increase in output complexity and specificity.

Chatbots and virtual assistants

1. First prompt: “What can I help you with today?”

This open question finds out what the user needs.

2. User says: “I have a problem with my account.”

3. Next prompt: “I see you have an account problem. Is it about logging in, billing, or account settings?”

Observe how the chatbot narrows down the problem area based on the user’s initial response.

4. User says: “It’s a billing problem.”

5. Detailed prompt: “Thanks for explaining. About your billing issue, have you seen any unexpected charges, problems with how you pay, or issues with your subscription plan?”

With the specific area identified, the chatbot probes for detailed information to diagnose the exact problem.
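The narrowing pattern in this exchange can be sketched as a small prompt selector. This is only an illustration: the keyword matching is a crude stand-in for real intent detection, and the follow-up prompts for logging in and settings are hypothetical additions that do not appear in the dialogue above.

```python
from typing import Dict, List

OPENING = "What can I help you with today?"
NARROWING = (
    "I see you have an account problem. "
    "Is it about logging in, billing, or account settings?"
)
# Detailed prompts keyed by a keyword in the user's reply.
# Only the billing prompt comes from the example; the others are hypothetical.
DETAILED: Dict[str, str] = {
    "billing": (
        "Thanks for explaining. About your billing issue, have you seen any "
        "unexpected charges, problems with how you pay, or issues with your "
        "subscription plan?"
    ),
    "logging in": "Thanks for explaining. Do you see an error message when you sign in?",
    "settings": "Thanks for explaining. Which account setting are you trying to change?",
}


def next_prompt(user_messages: List[str]) -> str:
    """Pick the next chatbot prompt, moving from general to specific."""
    if not user_messages:
        return OPENING
    latest = user_messages[-1].lower()
    # Most specific stage first: the user has named a problem area.
    for keyword, prompt in DETAILED.items():
        if keyword in latest:
            return prompt
    # Middle stage: we only know it is an account problem.
    if "account" in latest:
        return NARROWING
    # Nothing recognized yet, so stay at the open question.
    return OPENING


if __name__ == "__main__":
    history: List[str] = []
    print(next_prompt(history))
    history.append("I have a problem with my account.")
    print(next_prompt(history))
    history.append("It’s a billing problem.")
    print(next_prompt(history))
```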

Quantum computing algorithm development

1. Basic prompt: “Define a single qubit in the computational basis.”

This teaches the basics of quantum bits.

2. Next prompt: “Use a Hadamard gate on the qubit.”

Building on qubit knowledge, this introduces simple quantum operations.

3. Advanced prompt: “Make a quantum circuit for a two-qubit controlled-NOT (CNOT) gate.”

This step combines earlier ideas to build more complex quantum circuits.

4. Expert prompt: “Develop a quantum algorithm for Grover’s search on a 4-qubit system.”

This prompt asks the AI to create a real quantum algorithm using earlier knowledge.

5. Cutting-edge prompt: “Make Shor’s algorithm better to factor the number 15 using the fewest qubits.”

This final step asks for advanced improvements to a complex quantum algorithm.
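The first three prompts in this sequence can be checked numerically. Below is a minimal sketch in plain Python (no quantum library), assuming real-valued amplitudes and a two-qubit basis ordering of |00>, |01>, |10>, |11> with the first qubit as the CNOT control. The helper names `apply` and `tensor` are illustrative, and the sketch does not attempt the Grover or Shor steps.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def apply(gate: Matrix, state: Vector) -> Vector:
    """Multiply a gate matrix by a state vector."""
    return [sum(g * s for g, s in zip(row, state)) for row in gate]


def tensor(a: Vector, b: Vector) -> Vector:
    """Combine two single-qubit states into one two-qubit state."""
    return [x * y for x in a for y in b]


# Step 1: a single qubit in the computational basis state |0>.
ZERO: Vector = [1.0, 0.0]

# Step 2: the Hadamard gate turns |0> into an equal superposition.
S = 1.0 / math.sqrt(2.0)
HADAMARD: Matrix = [[S, S], [S, -S]]
plus = apply(HADAMARD, ZERO)

# Step 3: CNOT flips the second qubit when the first qubit is 1,
# which swaps the amplitudes of |10> and |11>.
CNOT: Matrix = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
]
bell = apply(CNOT, tensor(plus, ZERO))

if __name__ == "__main__":
    print("After Hadamard:", plus)
    print("After CNOT:", bell)
```

Applying CNOT to the superposed control qubit leaves amplitude only on |00> and |11>, which is the entangled Bell state that later, more complex circuits build on.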

Cybersecurity threat modeling

1. First prompt: “Name the main parts of a typical e-commerce system.”

This lists the basic components we’ll analyze through a cybersecurity lens.

2. Next prompt: “Map how data flows between these parts, including user actions and payments.”

Building on the component list, this shows how the system parts work together.

3. Detailed prompt: “Find possible entry points for cyber attacks in this e-commerce system. Look at both network and application weak spots.”

Using the system map, this prompt looks at specific security risks.

4. Advanced prompt: “Develop a threat model for a complex attack targeting the e-commerce platform’s outside connections.”

This step uses previous knowledge to address tricky, multi-part attack scenarios.

5. Expert prompt: “Design a zero-trust system to reduce these threats. Use ideas like least privilege and always checking who users are.”

The final prompt asks the AI to suggest advanced security solutions based on the full threat analysis.

Blockchain smart contract development

1. Basic prompt: “Write a simple Solidity function to move tokens between two addresses.”

This teaches fundamental smart contract actions.

2. Next prompt: “Create a time-locked vault contract where funds are released after a set time.”

Building on basic token moves, this adds time-based logic.

3. Advanced prompt: “Make a multi-signature wallet contract needing approval from 2 out of 3 chosen addresses for transactions.”

This step combines earlier concepts with more complex approval logic.

4. Expert prompt: “Develop a decentralized exchange (DEX) contract with automatic market-making.”

This prompt asks the AI to create a sophisticated DeFi application using earlier knowledge.

5. Cutting-edge prompt: “Make the DEX contract use less gas and work across different blockchains using a bridge protocol.”

This final step asks for advanced improvements and integration of complex blockchain ideas.
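To make the approval logic in step 3 concrete, here is a minimal Python model of a 2-of-3 multi-signature rule. It is not Solidity and not a deployable contract. The class and method names are hypothetical, and the sketch ignores signatures, funds, and gas so that only the threshold check is visible.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, Set


@dataclass
class MultiSigWallet:
    """Toy model: a transaction runs once enough distinct owners approve it."""

    owners: FrozenSet[str]
    required: int = 2
    approvals: Dict[int, Set[str]] = field(default_factory=dict)
    executed: Set[int] = field(default_factory=set)

    def approve(self, tx_id: int, owner: str) -> None:
        """Record an approval; repeat approvals by one owner count once."""
        if owner not in self.owners:
            raise PermissionError(f"{owner} is not an owner")
        self.approvals.setdefault(tx_id, set()).add(owner)

    def can_execute(self, tx_id: int) -> bool:
        """True when the threshold is met and the transaction has not run."""
        approved_by = self.approvals.get(tx_id, set())
        return tx_id not in self.executed and len(approved_by) >= self.required

    def execute(self, tx_id: int) -> bool:
        """Mark the transaction as executed if allowed; return success."""
        if not self.can_execute(tx_id):
            return False
        self.executed.add(tx_id)
        return True


if __name__ == "__main__":
    wallet = MultiSigWallet(owners=frozenset({"alice", "bob", "carol"}))
    wallet.approve(1, "alice")
    print("After one approval:", wallet.execute(1))
    wallet.approve(1, "bob")
    print("After two approvals:", wallet.execute(1))
```

Storing approvals as a set per transaction means a single owner cannot reach the threshold by approving twice, and the `executed` set prevents the same transaction from running again.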

Edge computing optimization

1. First prompt: “List the basic parts of an edge computing node.”

This sets up the main elements of edge computing structure.

2. Next prompt: “Create a simple task scheduling system for spreading work across multiple edge nodes.”

Building on the basic structure, this introduces resource management ideas.

3. Detailed prompt: “Develop a data preprocessing system that filters and compresses sensor data before sending it to the cloud.”

This applies edge computing principles to real data handling scenarios.

4. Advanced prompt: “Create an adaptive machine learning model that can update itself on edge devices based on local data patterns.”

Combining previous knowledge, this prompt explores advanced AI abilities in edge environments.

5. Expert prompt: “Design a federated learning system that allows collaborative model training across a network of edge devices while keeping data private.”

The final prompt asks the AI to combine complex machine learning techniques with edge computing limits.
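As one possible answer to the second prompt in this sequence, the sketch below spreads tasks across edge nodes with a greedy least-loaded rule. The task costs and node names are made up for illustration, and a real scheduler would also need the network and power factors mentioned earlier.

```python
import heapq
from typing import Dict, List, Tuple


def schedule(tasks: Dict[str, float], nodes: List[str]) -> Dict[str, List[str]]:
    """Assign each task to the node with the smallest current load.

    Tasks are placed largest first, a common greedy heuristic that tends
    to balance load but is not guaranteed to be optimal.
    """
    if not nodes:
        raise ValueError("at least one node is required")
    # Min-heap of (current load, node name).
    heap: List[Tuple[float, str]] = [(0.0, node) for node in nodes]
    heapq.heapify(heap)
    assignment: Dict[str, List[str]] = {node: [] for node in nodes}
    by_cost = sorted(tasks.items(), key=lambda item: item[1], reverse=True)
    for task, cost in by_cost:
        load, node = heapq.heappop(heap)  # least-loaded node so far
        assignment[node].append(task)
        heapq.heappush(heap, (load + cost, node))
    return assignment


if __name__ == "__main__":
    # Hypothetical tasks with relative processing costs.
    demo_tasks = {"resize": 4.0, "filter": 3.0, "compress": 2.0, "upload": 1.0}
    print(schedule(demo_tasks, ["node-a", "node-b"]))
```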

Natural language UI/UX design

1. Basic prompt: “Create a simple voice command system for controlling smart home devices.”

Here, the model learns fundamental voice UI concepts.

2. Next prompt: “Make the voice interface give context-aware responses, considering the time of day and where the user is.”

Building on basic commands, this sets up a more nuanced interaction design.

3. Advanced prompt: “Develop a multi-input interface combining voice, gesture, and touch inputs for a virtual reality environment.”

This helps the model integrate multiple input methods to design more complex interactions.

4. Expert prompt: “Create an adaptive UI that changes its complexity based on user expertise and usage patterns.”

Applying earlier principles, this prompt explores personalized and evolving interfaces.

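5. Cutting-edge prompt: “Design a brain-computer interface (BCI) that turns brain signals into UI commands, using machine learning to get more accurate over time.”

This final step asks the AI to extend the earlier interface concepts to an experimental input method.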

Scalable AI: Least-to-most prompting

Prompt engineering methods like zero-shot, few-shot, and least-to-most prompting are becoming key to expanding LLM capabilities.

With more focused LLM outputs, AI can augment countless human tasks. This opens doors for business innovation and value creation.

However, getting reliable and consistent LLM results needs advanced prompting techniques.

Prompt engineers must design prompts carefully. Poor AI oversight carries serious risks, and failing to verify responses can lead to false, biased, or misleading outputs.

Least-to-most prompting shows particular promise, heightening our understanding of and trust in AI systems.

Remember, prompt engineering isn’t one-size-fits-all. Each use case needs careful thought about its context, goals, and potential risks.

As AI becomes more ubiquitous, we must learn to use it responsibly and effectively.

Least-to-most prompting exemplifies a scalable AI strategy, empowering models to address progressively challenging problems through structured, incremental reasoning.

People Also Ask

What are the limitations of least-to-most prompting?

Least-to-most prompting, while effective, has some constraints:

- It can be time-consuming for complex tasks
- The method may not always capture nuanced context or implicit knowledge
- It requires careful design to avoid biased outcomes

What are the advantages of least-to-most prompting?

For every limitation, least-to-most prompting also boasts a host of benefits:

- Enhances AI comprehension of complex tasks by breaking them down
- Improves output accuracy and relevance through logical progressions
- Increases transparency in AI decision-making
- Better error detection at each stage
- More consistent AI performance across various applications