We often talk about “artificial intelligence” as if it were just one thing. However, artificial intelligence is an umbrella term for a wide range of technologies. These technologies share a common goal: simulating aspects of human intelligence, such as knowledge processing, decision-making, and visual perception (computer vision).

Researchers use a wide range of artificial intelligence models to achieve those goals. In this article we will explore the following topics:

- What is an artificial intelligence model?

- The ten most popular AI models

- How generative AI models mimic human intelligence

- Use cases for artificial intelligence models in business

- The ethical concerns raised by different AI models

Artificial intelligence models are solving complex problems every day – but it’s not just Generative AI that’s doing the hard work. Read on to discover how AI models emulate the human brain.

What are artificial intelligence models?

Artificial Intelligence (AI) models are sophisticated algorithms that analyze datasets, detect intricate patterns, and make informed decisions. These programs operate on the principle of machine learning. They review extensive training datasets to enhance their understanding and predictive capabilities.

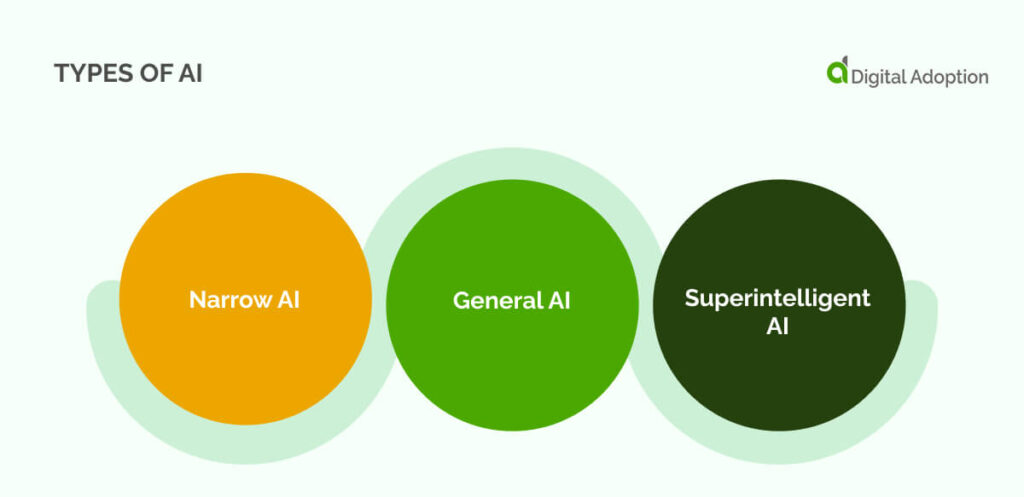

Types of AI

AI systems are commonly grouped into three categories based on their scope and capabilities.

Narrow AI

Narrow AI models specialize in a specific task or a set of closely related tasks. They excel in well-defined scenarios, such as image recognition, speech processing, or playing board games. These models, including linear regression algorithms, are tailored for specific applications and lack the broad adaptability envisioned for general or superintelligent AI.

General AI

General AI, in contrast, aims for broader cognitive abilities, resembling human intelligence across diverse tasks. No existing model achieves this. Deep Neural Networks (DNNs) can handle complex, non-linear patterns and are proficient in tasks like image and speech recognition, but each trained network remains specialized. DNNs are therefore better seen as a step toward more generalized capabilities than as general AI itself.

Superintelligent AI

Superintelligent AI represents the pinnacle of artificial intelligence: hypothetical systems that would surpass human intelligence across the board and outperform humans at nearly all economically valuable work. As of now, true superintelligent AI remains theoretical, with ongoing research exploring its potential and ethical implications.

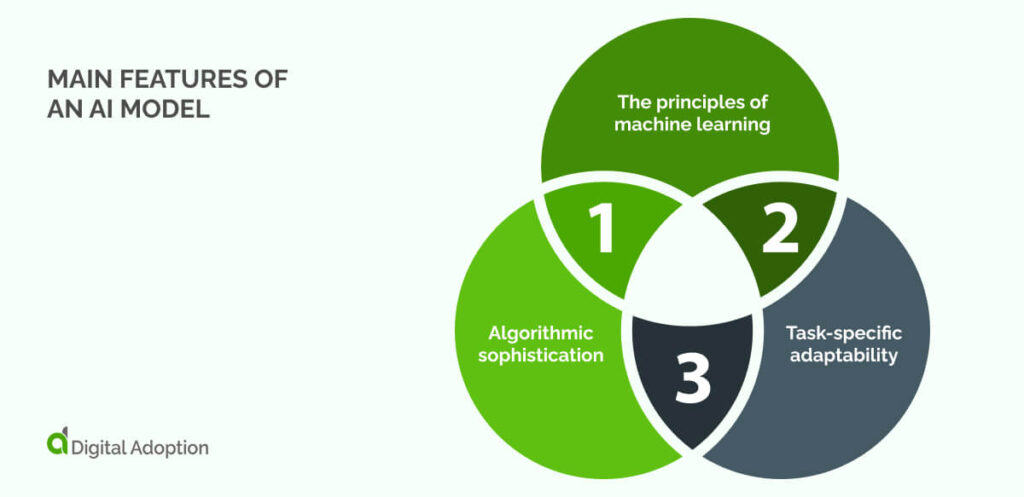

Main Features of an AI Model

AI models have several features that define their functionality and effectiveness.

Algorithmic sophistication

AI models are built on advanced algorithms that analyze datasets with precision. These algorithms detect intricate patterns within the data, enabling the model to make informed decisions.

The principles of machine learning

The core principle guiding AI models is machine learning. These models undergo training on extensive datasets, progressively enhancing their understanding and predictive capabilities over time.

Task-specific adaptability

Each AI model is tailored for specific tasks, showcasing adaptability in addressing well-defined scenarios. For example, Deep Neural Networks are adept at handling complex patterns, making them suitable for image and speech recognition tasks.

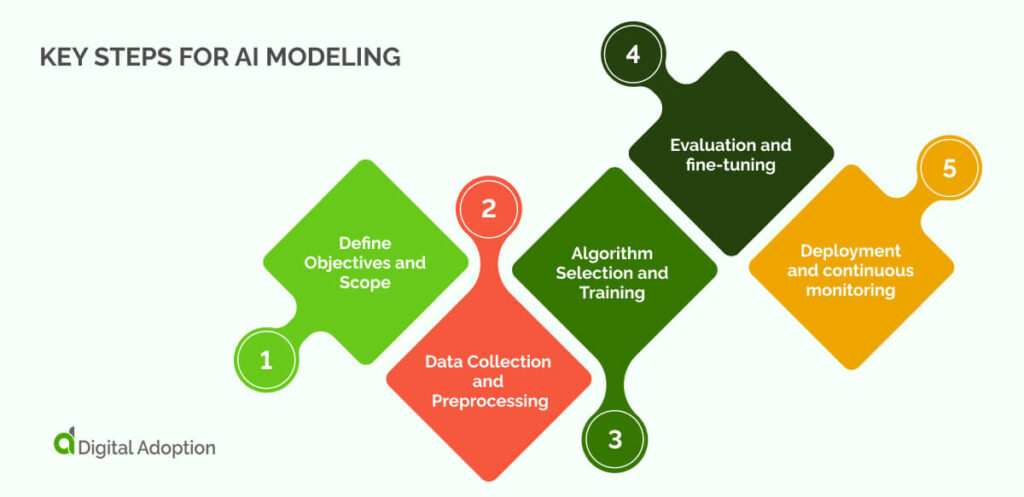

Key Steps for AI Modeling

Creating effective AI models involves a series of key steps that shape their development and deployment.

Define Objectives and Scope

The initial step in AI modeling is clearly defining the objectives and scope of the model. Understanding the specific task or tasks the model will address is crucial for successful implementation.

Data Collection and Preprocessing

AI models rely on data for training and decision-making. Collecting relevant datasets and preprocessing them to ensure quality and consistency are essential steps in the modeling process.

Algorithm Selection and Training

Choosing the appropriate algorithm based on the defined objectives is a critical decision. The selected algorithm undergoes training on the prepared datasets to learn patterns and relationships.

Evaluation and fine-tuning

After training, the model is evaluated for its performance against predefined metrics. Fine-tuning, based on evaluation results, ensures the model achieves optimal accuracy and efficiency.

Deployment and continuous monitoring

Once validated, the AI model is deployed for practical use. Continuous monitoring is essential to ensure its ongoing effectiveness and to address any evolving challenges or changes in the environment.
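The five steps above can be sketched end to end in a few lines of Python. This is a toy illustration rather than a production workflow: the synthetic readings, the nearest-centroid classifier, the 0.9 accuracy target, and every function name are assumptions chosen to keep the example self-contained.

```python
import random
from statistics import mean

# Step 1: objective and scope (hypothetical): label a reading as 0 or 1,
# aiming for at least 90% accuracy on held-out data.
TARGET_ACCURACY = 0.9

def collect_data(n=200, seed=0):
    """Step 2a: collect data. Here we synthesize (reading, label) pairs."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        label = rng.choice([0, 1])
        reading = rng.gauss(2.0 if label == 0 else 8.0, 1.0)
        # Simulate an occasional missing value that preprocessing must handle.
        rows.append((None if rng.random() < 0.05 else reading, label))
    return rows

def preprocess(rows):
    """Step 2b: drop rows with missing readings."""
    return [(x, y) for x, y in rows if x is not None]

def train(rows):
    """Step 3: 'train' a nearest-centroid model (one mean per class)."""
    return {label: mean(x for x, y in rows if y == label) for label in (0, 1)}

def predict(model, x):
    """Return the label whose centroid is closest to x."""
    return min(model, key=lambda label: abs(x - model[label]))

def evaluate(model, rows):
    """Step 4: accuracy on held-out rows."""
    return sum(predict(model, x) == y for x, y in rows) / len(rows)

rows = preprocess(collect_data())
split = int(0.8 * len(rows))
train_rows, test_rows = rows[:split], rows[split:]
model = train(train_rows)
accuracy = evaluate(model, test_rows)

# Step 5: deploy only if the target is met; a real system would keep
# re-running evaluate() on fresh data to monitor for drift.
ready_to_deploy = accuracy >= TARGET_ACCURACY
print(f"accuracy={accuracy:.2f}, deploy={ready_to_deploy}")
```

In practice each step is far more involved, but the order stays the same: the objective fixes the metric, the metric drives evaluation, and evaluation gates deployment.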

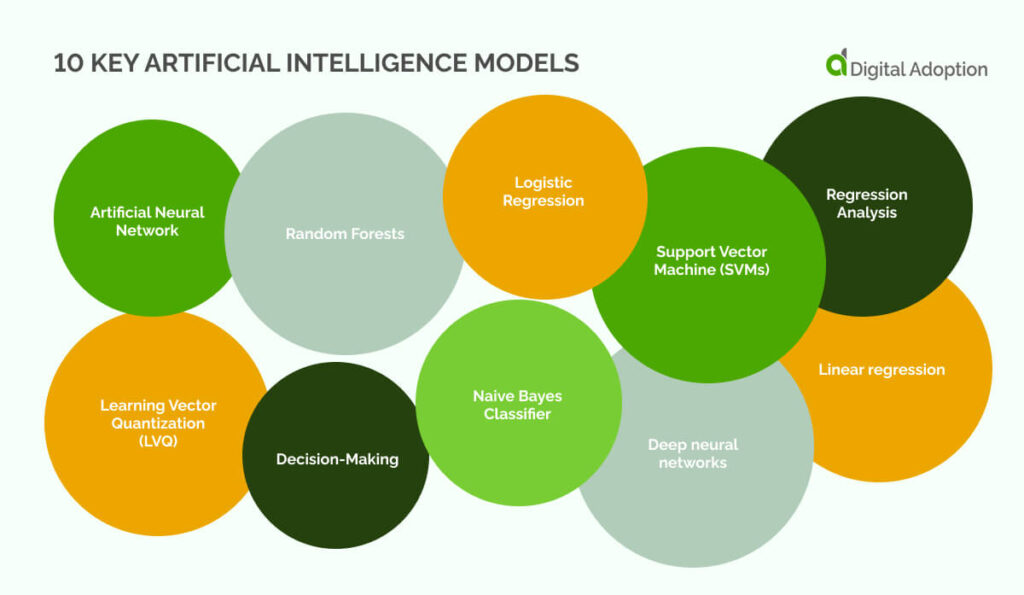

10 key artificial intelligence models

Today, AI is not just a scientific curiosity but a practical reality, with various models being part of our everyday lives. In this section, we delve into the different types of AI models, exploring each one to understand how they’ve shaped the landscape of AI as we know it.

Artificial Neural Network

Artificial Neural Networks (ANNs) are computing systems inspired by the human brain’s structure and function. They comprise interconnected nodes, or artificial neurons, organized in layers. Information passes through these layers, and the network learns to recognize patterns and relationships within the data, making ANNs effective for tasks like image and speech recognition.
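To make the idea of information passing through layers concrete, here is a minimal sketch of a forward pass through a two-layer network. The weights are hand-picked for illustration (an assumption, not the result of training) so that the network computes XOR, a pattern that no single layer of this kind can represent on its own.

```python
def relu(value):
    """Rectified linear activation: pass positives, clamp negatives to zero."""
    return max(0.0, value)

def layer(inputs, weights, biases, activation):
    """One fully connected layer: weighted sum per neuron, then activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

# Hand-picked (not learned) weights that make the network compute XOR.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]
OUTPUT_BIASES = [0.0]

def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    output = layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, lambda v: v)
    return output[0]

for a in (0, 1):
    for b in (0, 1):
        print(a, b, forward([a, b]))
```

A real network would start from random weights and adjust them during training; only the forward computation is shown here.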

Neural networks have been one of the most important AI models in the past few years. Back in 2021, Stanford’s AI100 study called them one of the most important advances in artificial intelligence. But most people know artificial neural networks because they are the foundation of the large language models (LLMs) that power generative AI.

Decision-Making

Decision-making models in AI focus on making choices based on input data and predefined criteria. These models are used in scenarios where the system needs to take actions or make decisions, often in complex and dynamic environments. Decision-making models can range from rule-based systems to more sophisticated approaches, like reinforcement learning.
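The two ends of that range can be sketched side by side. The first function below is a fixed rule; the second is an epsilon-greedy agent, one of the simplest reinforcement learning strategies, which learns from rewards which action to prefer. The inventory rule, the reward values, and all names are hypothetical and chosen only for illustration.

```python
import random

def rule_based_decision(stock_level, reorder_point=20):
    """Predefined criterion: reorder whenever stock falls below a threshold."""
    return "reorder" if stock_level < reorder_point else "hold"

def epsilon_greedy(rewards, steps=500, epsilon=0.1, seed=0):
    """Learn the value of each action by trial and error.

    rewards[i] is the (here deterministic) payoff of action i.
    """
    rng = random.Random(seed)
    estimates = [0.0] * len(rewards)
    counts = [0] * len(rewards)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(rewards))  # explore a random action
        else:
            # exploit the action with the highest current estimate
            action = max(range(len(rewards)), key=estimates.__getitem__)
        counts[action] += 1
        # Incremental average of the rewards observed for this action.
        estimates[action] += (rewards[action] - estimates[action]) / counts[action]
    return estimates

estimates = epsilon_greedy([0.2, 0.8, 0.5])
best_action = max(range(len(estimates)), key=estimates.__getitem__)
print(rule_based_decision(12), best_action)
```

The rule never changes unless someone edits it, whereas the agent's preferences shift as it gathers experience.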

Learning Vector Quantization (LVQ)

Learning Vector Quantization is a type of machine learning model that belongs to the family of neural networks. It’s commonly used for classification tasks. LVQ organizes input data into predefined classes by adjusting prototype vectors. During training, the model adjusts these prototypes to better represent the characteristics of the input data.
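The prototype adjustment can be sketched with the basic LVQ1 update rule: the prototype nearest to a training point moves toward it if their classes match and away from it otherwise. The toy data, starting prototypes, and learning rate below are illustrative assumptions.

```python
def squared_distance(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def nearest_index(prototypes, point):
    """Index of the prototype closest to the point."""
    return min(range(len(prototypes)),
               key=lambda i: squared_distance(prototypes[i][0], point))

def train_lvq1(prototypes, data, learning_rate=0.1, epochs=20):
    """Return adjusted copies of (vector, label) prototypes."""
    protos = [(list(vector), label) for vector, label in prototypes]
    for _ in range(epochs):
        for point, label in data:
            vector, proto_label = protos[nearest_index(protos, point)]
            # Attract on a class match, repel on a mismatch.
            direction = 1.0 if proto_label == label else -1.0
            for d in range(len(vector)):
                vector[d] += direction * learning_rate * (point[d] - vector[d])
    return protos

def classify(prototypes, point):
    """Assign the class of the nearest prototype."""
    return prototypes[nearest_index(prototypes, point)][1]

DATA = [((0, 0), "A"), ((1, 0), "A"), ((0, 1), "A"), ((1, 1), "A"),
        ((5, 5), "B"), ((6, 5), "B"), ((5, 6), "B"), ((6, 6), "B")]
START = [((2.0, 2.0), "A"), ((4.0, 4.0), "B")]

trained = train_lvq1(START, DATA)
print(classify(trained, (0.5, 0.5)), classify(trained, (5.5, 5.5)))
```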

Random Forests

A random forest, or random decision forest, is an ensemble learning method that builds multiple decision trees during training. It outputs the mode of the trees’ predicted classes (classification) or the mean of their predictions (regression). Combining many trees makes the method more robust than a single decision tree, which is why it is widely used for both kinds of task.
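The sketch below illustrates the ensemble idea under heavy simplification. Each "tree" is a one-split stump trained on a bootstrap sample and a randomly chosen feature, which is an assumption made to keep the code short; real random forests grow much deeper trees. The aggregation step, a majority vote or a mean over trees, is the part that matches the description above.

```python
import random
from statistics import mean, mode

def fit_stump(rows, feature):
    """A one-split 'tree': threshold halfway between the two class means."""
    ones = [x[feature] for x, y in rows if y == 1]
    zeros = [x[feature] for x, y in rows if y == 0]
    if not ones or not zeros:
        # The bootstrap sample held a single class: predict it always.
        constant = 1 if ones else 0
        return lambda x: constant
    threshold = (mean(ones) + mean(zeros)) / 2
    high_label = 1 if mean(ones) > mean(zeros) else 0
    return lambda x: high_label if x[feature] > threshold else 1 - high_label

def fit_forest(rows, n_trees=25, seed=0):
    rng = random.Random(seed)
    n_features = len(rows[0][0])
    trees = []
    for _ in range(n_trees):
        sample = [rng.choice(rows) for _ in rows]  # bootstrap sample
        feature = rng.randrange(n_features)        # random feature choice
        trees.append(fit_stump(sample, feature))
    return trees

def predict_class(trees, x):
    """Classification: the mode (majority vote) of the trees' outputs."""
    return mode([tree(x) for tree in trees])

def predict_mean(trees, x):
    """Regression-style output: the mean of the trees' outputs."""
    return mean([tree(x) for tree in trees])

ROWS = [((1, 1), 0), ((2, 1), 0), ((1, 2), 0), ((2, 2), 0),
        ((8, 8), 1), ((9, 8), 1), ((8, 9), 1), ((9, 9), 1)]
trees = fit_forest(ROWS)
print(predict_class(trees, (1.5, 1.5)), predict_class(trees, (8.5, 8.5)))
```

An odd number of trees is used so that a two-class vote cannot tie.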

Logistic Regression

Logistic Regression is a statistical method used for binary classification. Despite its name, it is employed for classification rather than regression. The model calculates the probability of an instance belonging to a particular class and makes predictions based on a predefined threshold. Logistic regression is widely used for its simplicity and interpretability.
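As a minimal sketch of that two-step process, the code below turns a weighted sum of features into a probability with the logistic (sigmoid) function and then applies a threshold. The coefficients are hypothetical placeholders; in practice they would be fitted to training data.

```python
import math

def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_probability(features, weights, bias):
    """Probability that the instance belongs to the positive class."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)

def classify(features, weights, bias, threshold=0.5):
    """Turn the probability into a class label using a predefined threshold."""
    return 1 if predict_probability(features, weights, bias) >= threshold else 0

# Hypothetical fitted coefficients for a two-feature model.
WEIGHTS = [1.5, -2.0]
BIAS = 0.25

print(predict_probability([2.0, 0.5], WEIGHTS, BIAS))
print(classify([2.0, 0.5], WEIGHTS, BIAS), classify([0.0, 1.0], WEIGHTS, BIAS))
```

Raising the threshold makes the model more conservative about predicting the positive class, which is a business decision as much as a statistical one.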

Support Vector Machines (SVMs)

Support Vector Machines (SVMs) are supervised learning models used for classification and regression tasks. For classification, an SVM finds the hyperplane in a high-dimensional space that best separates data points into different classes, ideally with the widest possible margin. Combined with kernel functions, SVMs are effective in scenarios with complex decision boundaries and are versatile across domains.
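For the linear case, the search for a separating hyperplane can be sketched as sub-gradient descent on a regularized hinge loss. This is one simple way to train a linear SVM, not the solver that production libraries use, and the data and hyperparameters are illustrative assumptions.

```python
def decision_value(weights, bias, point):
    """Signed score: positive on one side of the hyperplane, negative on the other."""
    return sum(w * x for w, x in zip(weights, point)) + bias

def train_linear_svm(data, learning_rate=0.1, regularization=0.01, epochs=100):
    """data holds (point, label) pairs with labels +1 or -1."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for point, label in data:
            margin = label * decision_value(weights, bias, point)
            # Regularization step: shrink the weights, which favors a wide margin.
            weights = [w * (1 - learning_rate * regularization) for w in weights]
            if margin < 1:
                # The point is inside the margin or misclassified: correct for it.
                weights = [w + learning_rate * label * x
                           for w, x in zip(weights, point)]
                bias += learning_rate * label
    return weights, bias

def classify(weights, bias, point):
    return 1 if decision_value(weights, bias, point) >= 0 else -1

DATA = [((2, 2), 1), ((3, 3), 1), ((3, 2), 1),
        ((-2, -2), -1), ((-3, -3), -1), ((-2, -3), -1)]
weights, bias = train_linear_svm(DATA)
print(classify(weights, bias, (2.5, 2.5)), classify(weights, bias, (-2.5, -2.5)))
```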

Naive Bayes Classifier

Naive Bayes is a probabilistic classification algorithm based on Bayes’ theorem. It assumes that features are independent of one another given the class, hence the term “naive.” The model calculates the probability of a data point belonging to each class and picks the most likely one. Despite its simplicity, Naive Bayes is powerful in text classification and spam filtering, among other applications.
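A toy spam filter shows how the calculation works. The sketch below is a multinomial Naive Bayes with add-one (Laplace) smoothing, working in log space to avoid multiplying many small probabilities. The six training messages are invented for illustration.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(documents):
    """Count documents per class and words per class."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in documents:
        class_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocabulary.add(word)
    return class_counts, word_counts, vocabulary

def log_scores(model, text):
    """Log prior plus summed log likelihoods for each class (unnormalized)."""
    class_counts, word_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(class_counts[label] / total_docs)
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen in training
            # Add-one smoothing keeps unseen word/class pairs from zeroing the score.
            likelihood = (word_counts[label][word] + 1) / (total_words + len(vocabulary))
            score += math.log(likelihood)
        scores[label] = score
    return scores

def classify(model, text):
    scores = log_scores(model, text)
    return max(scores, key=scores.get)

MESSAGES = [
    ("win money now", "spam"), ("free money offer", "spam"), ("win free prize", "spam"),
    ("meeting schedule today", "ham"), ("project meeting tomorrow", "ham"),
    ("lunch schedule tomorrow", "ham"),
]
model = train_naive_bayes(MESSAGES)
print(classify(model, "free money"), classify(model, "meeting tomorrow"))
```

Each word contributes to the score separately, which is exactly the independence assumption at work.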

Regression Analysis

Regression analysis is a statistical method used to model the relationship between a dependent variable and one or more independent variables. In the context of AI, regression models predict a continuous outcome. These models help understand and quantify the relationship between variables, making them valuable for tasks such as predicting sales, stock prices, or other numerical values.

Linear Regression

Linear Regression is a fundamental statistical method used for modeling the relationship between a dependent variable and one or more independent variables. It predicts a continuous outcome by fitting a linear equation to the observed data.
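For a single independent variable, fitting the linear equation has a closed-form least-squares solution, sketched below. The numbers are made up so that the points lie exactly on the line y = 2x + 1.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    # Slope is the covariance of x and y divided by the variance of x.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept

def predict(slope, intercept, x):
    return slope * x + intercept

xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)
print(slope, intercept, predict(slope, intercept, 5))
```

Real data will not fall exactly on a line, in which case the same formula returns the line that minimizes the sum of squared errors.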

Deep Neural Networks

Deep Neural Networks (DNNs), a subset of artificial neural networks, stack multiple hidden layers between the input and output layers. These layers enable the model to automatically learn hierarchical representations of data, which has proved successful in natural language processing, image recognition, and speech recognition.

What’s special about generative AI models?

In 2024, much of the hype about Artificial Intelligence is driven by popular Generative AI. As such, it is worth taking a moment to understand how generative AI is distinct from other AI models.

Generative AI models stand out from traditional AI. Generative AI does not just analyze and interpret old data. As we now all know, generative AI models can create “new” material – whether that’s text, graphics, code, or even video.

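Different generative models are trained in different ways. The large language models behind tools like ChatGPT, for instance, learn by predicting the next piece of text. Another well-known family, Generative Adversarial Networks (GANs), is trained through a process of competition between two parts: a generator and a discriminator.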

- Generator: It starts by creating something, like an image or text, from random noise.

- Discriminator: This part tries to tell if what the generator made is real or fake.

- Competition: They keep going back and forth. The generator tries to get better at making things that look real, and the discriminator gets better at telling the difference.

- Learning: Over time, the generator learns to create things that are so good, the discriminator can’t easily tell if they’re real or fake.

- Result: In the end, you have a generator that’s pretty good at making new and realistic stuff, whether it’s images, text, or something else.

In short, a GAN learns to generate things by getting better at fooling another part of itself into thinking what it made is the real deal.
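The back-and-forth described above can be sketched with a deliberately tiny GAN in which both parts are one-dimensional. The generator is a line, G(z) = a*z + b, and the discriminator is a logistic unit, D(x) = sigmoid(w*x + c). The "real" data, the learning rate, and all names are assumptions, and the gradients are written out by hand instead of relying on a deep learning library. Training of this kind is known to oscillate, so the sketch shows the mechanics of the competition and makes no claim about convergence.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def discriminator_step(w, c, reals, fakes, lr):
    """One gradient step on -log D(real) - log(1 - D(fake))."""
    grad_w = grad_c = 0.0
    for x in reals:  # push D(real) toward 1
        p = sigmoid(w * x + c)
        grad_w -= (1.0 - p) * x
        grad_c -= (1.0 - p)
    for x in fakes:  # push D(fake) toward 0
        p = sigmoid(w * x + c)
        grad_w += p * x
        grad_c += p
    n = len(reals)
    return w - lr * grad_w / n, c - lr * grad_c / n

def generator_step(a, b, w, c, noise, lr):
    """One gradient step on -log D(G(z)): make fakes look real to D."""
    grad_a = grad_b = 0.0
    for z in noise:
        p = sigmoid(w * (a * z + b) + c)
        d_fake = -(1.0 - p) * w  # derivative of the loss w.r.t. G(z)
        grad_a += d_fake * z
        grad_b += d_fake
    n = len(noise)
    return a - lr * grad_a / n, b - lr * grad_b / n

def train_gan(steps=200, batch=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator starts by producing values around 0
    w, c = 0.0, 0.0  # discriminator starts undecided (always outputs 0.5)
    for _ in range(steps):
        reals = [rng.gauss(4.0, 0.5) for _ in range(batch)]  # "real" data near 4
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]  # random noise input
        fakes = [a * z + b for z in noise]
        w, c = discriminator_step(w, c, reals, fakes, lr)  # discriminator's turn
        a, b = generator_step(a, b, w, c, noise, lr)       # generator's turn
    return a, b, w, c

a, b, w, c = train_gan()
print(f"generator: a={a:.2f}, b={b:.2f}; discriminator: w={w:.2f}, c={c:.2f}")
```

Image and text GANs replace the line and the logistic unit with deep neural networks, but the alternating update loop has the same shape.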

Business use cases for artificial intelligence models

AI models are exciting in their own right, and they have a long history in computer science research. For most organizations, however, it’s their business capabilities that make them most interesting. Here are some key examples of business use cases for AI.

Business use of random forest regression: credit scoring in financial services

In recent years, financial institutions have developed strong programs of digital transformation. Their application of AI models has been very effective.

In particular, random forest models are robust for credit-scoring applications. By considering various financial factors, these models assess creditworthiness, providing financial institutions with a reliable tool for making informed decisions on loan approvals and managing credit risk.

Business use of regression analysis: sales forecasting in retail

Regression analysis is instrumental in predicting numerical outcomes, making it ideal for sales forecasting in the retail sector. By analyzing historical sales data and considering factors like promotions and seasonality, businesses can make informed decisions regarding inventory management and resource allocation.

As Biswas, Sanyal, and Mukherjee demonstrated in 2020, regression analysis is now being updated with more sophisticated techniques. However, it remains a core element of every AI researcher’s knowledge base.

Business use of logistic regression: predicting employee attrition

Human beings seem to behave in unpredictable and spontaneous ways. However, investigating huge datasets of employee behavior can reveal striking patterns.

For example, logistic regression models can estimate how likely an employee is to leave, based on factors such as job satisfaction, tenure, and performance metrics. This aids proactive workforce management and retention strategies.

It may not be as exciting as ChatGPT, but logistic regression has a valuable role in your organization’s human resources strategy.

Ethical concerns of artificial intelligence models

Many commentators have raised questions about the ethics and morality of AI. Although there are many general problems with the ethics of AI, each model brings up its own specific questions. Consider these carefully before planning your implementation.

Generative AI Models

ChatGPT and similar models can perpetuate societal biases ingrained in their training data, potentially reproducing stereotypes or discriminatory language. Their ability to generate realistic content also raises serious concerns about convincing fake news, deepfakes, and other misleading information.

Artificial Neural Networks (ANNs)

The complexity of ANNs makes their decision-making opaque, which hinders clear understanding and explanation of specific outputs. If the training data contains biases, ANNs can amplify and perpetuate them in their predictions, so vigilance is needed during model development.

Decision-Making Models

The use of decision-making models in sensitive areas like criminal justice raises concerns about fairness, potential biases, and the impact on individuals’ lives. Transparent and accountable decision-making processes help prevent unintended consequences and foster fair outcomes.

Random Forests

While random forests are recognized for their robustness, they can inadvertently reinforce biases present in the training data, potentially compromising the fairness of decision outcomes. These ethical implications deserve careful attention when developing and deploying random forest models.

Support Vector Machines (SVMs)

SVMs, especially in scenarios with complex decision boundaries, may inadvertently contribute to biased outcomes, prompting concerns about fairness and equity. Addressing potential biases and ensuring fairness is essential when deploying SVMs across various domains.

The future of artificial intelligence models

For decades, data scientists have been diligently working on AI models for all kinds of applications that simulate human intelligence in key ways.

As we’ve seen in this article, the major issues of the day are not the terrors of self-aware AI or artificial general intelligence. Rather, we are still working out what to do with the models we DO have!

For example, at the UK’s AI summit in November 2023, the international delegation seemed to agree that one of the short-term problems was disinformation, a major issue for the generative AI models we have today.

For the moment, researchers will continue with their AI modeling work. A year from now, the field may have advanced even further.