The potential of AI has left the realm of sci-fi cinema and continues to impact the real world.

AI-driven chatbots like ChatGPT and Google Gemini have seen user bases reach millions worldwide. Machine learning (ML) and natural language understanding (NLU) enable these tools to have fluid, intuitive conversations capable of handling complex demands.

However, AI’s numerous risks leave its ethical implications in a largely unexamined grey area. Major concerns include data privacy and protection, algorithmic bias, and discrimination. AI can also hallucinate, generate misinformation, and make inaccurate decisions.

This is where AI ethics comes into play. AI ethics encompasses diverse lines of research and initiatives that assess the ethical implications of AI’s role in society.

This article explores AI ethics and the importance of guiding AI innovation responsibly. We define AI ethics, examine the key principles, and outline the top ethical concerns. We also share insights into organizations that help ensure AI remains a win-win for all.

What are AI ethics?

AI ethics is a diverse field of research that considers the complex social and moral implications of artificial intelligence (AI). It includes efforts to understand, measure, and mitigate AI’s current and future negative impacts.

With a few carefully crafted sentences, AI can create artistic illustrations in seconds. It’s automating tasks and helping business executives make more targeted decisions.

For example, it can now detect manufacturing flaws and support expert-level disease diagnosis in healthcare.

With all the potential value AI promises, it’s also essential to be aware of its material concerns.

How do AI systems collect, store, and use personally identifiable information (PII)?

Unfortunately, biases within society imprint on the data used to train AI. This potentially leads to unfair and imbalanced outputs.

AI’s ability to generate convincingly authentic outputs, even when they are factually inaccurate, is raising eyebrows. Flawed decision-making by AI systems can also have serious consequences.

Confidence in these capabilities without watertight testing can lead to irreparable harm.

These risks can translate into real-world problems. These include regulatory violations, reputational damage, and even broader ethical missteps that negatively impact society.

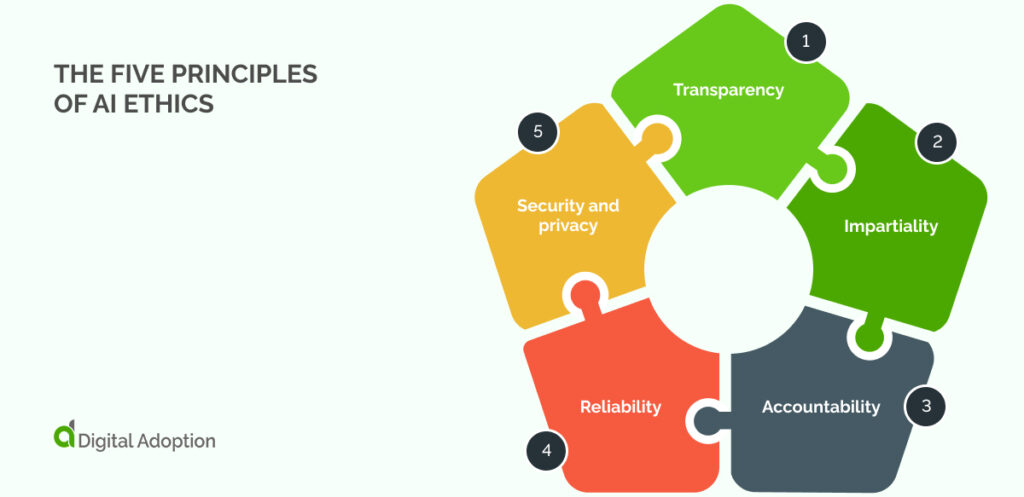

What are the five principles of AI ethics?

Guiding AI’s evolution in modern times is proving to be somewhat unwieldy.

The dynamic and progressive nature of AI development often sees excitement for its innovation overshadow the negative potential.

For example, US-based company Clearview AI developed a facial recognition system whose massive database included images scraped from social media without user consent.

This practice raised serious privacy concerns and resulted in hefty fines from regulators to the tune of £7.5 million.

The following five core principles aim to ease the ethical design, deployment, and oversight of AI:

- Transparency

The potential of AI’s application across many business sectors means it must be developed transparently. The algorithms must be trained on verifiable data that is explainable to relevant stakeholders.

- Impartiality

AI systems should be developed with neutrality embedded. This includes flagging biases present in the data they’re trained on. It ensures fair, equal, and unbiased treatment for all users, generating trust and confidence in AI solutions.

UNESCO considers “Respect, protection, and promotion of human rights and fundamental freedoms and human dignity” to be core to AI ethical principles.

- Accountability

The high stakes of AI’s use necessitate a clear and defined chain of responsibility. This encompasses the entire lifecycle, from initial design and development through launch and ongoing operation. Establishing clear ownership at each stage ensures accountability and fast-tracks issue resolution.

- Reliability

No matter the scope of AI’s promises, its technology is severely limited if it can’t be relied on to perform consistently. For example, AI-driven stock trading systems could occasionally make erratic decisions.

If not preempted, anomalies in decision capabilities could lead to substantial losses. AI may misinterpret market signals or recommend a large buy order on a stock that then suffers a sudden and unexpected downturn. The consequences could be irreversible.

- Security and privacy

Security and privacy are core pillars of AI ethics. Strong security safeguards are essential to prevent breaches of sensitive data used by AI systems. For example, a data breach could expose patients’ private information if a medical diagnosis tool were hacked.

The ability to inadvertently collect users’ data makes privacy a top AI risk. Clear data collection practices and anonymization techniques help mitigate breaches of personal data. Scenarios like unsanctioned facial recognition could spark surveillance concerns.
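To make the idea of anonymization techniques more concrete, here is a minimal Python sketch of pseudonymization, a related but weaker technique in which direct identifiers are replaced with keyed hashes. The field names, the sample record, and the `SECRET_KEY` value are all hypothetical and chosen only for illustration. Pseudonymized data can still be re-identified by anyone holding the key, so this is a starting point rather than full anonymization.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret"

# Hypothetical set of fields treated as direct identifiers.
DIRECT_IDENTIFIERS = {"name", "email"}


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a keyed SHA-256 digest that stands in for the raw value."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict) -> dict:
    """Replace direct identifiers with pseudonyms and keep other fields as-is."""
    return {
        field: pseudonymize(str(value)) if field in DIRECT_IDENTIFIERS else value
        for field, value in record.items()
    }


# Hypothetical record used only to demonstrate the function.
record = {"name": "Ada Example", "email": "ada@example.com", "age_band": "30-39"}
scrubbed = scrub_record(record)
print(scrubbed)
```

Using a keyed hash (HMAC) instead of a plain hash makes it harder for an outsider to reverse the pseudonyms by hashing guessed names or email addresses.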

What are the top AI ethics concerns?

Artificial intelligence (AI) is fast-tracking industry capabilities. However, its potential is not without key ethical considerations.

With this in mind, let’s look closer at some of the most pressing concerns.

Data privacy, protection, and security

Data is the lifeblood of AI, making its security integral for healthy AI systems. AI relies on huge pools of data to operate. This means it can potentially store personally identifiable information (PII) provided by users.

Concerns naturally arise around unauthorized access, misuse of personal information, and the lack of transparency around how data is used and stored.

The European Union’s General Data Protection Regulation (GDPR) is a global example of privacy and protection legislation. It requires companies to handle personal data lawfully, and it applies to the data of individuals within the European Economic Area (EEA).

As AI systems rely heavily on data to function, the GDPR’s principles of transparency, accountability, and individual control over data become even more important. The more recent EU AI Act complements the GDPR as the first major law regulating the use of AI across Europe.

Bias and discrimination

Social media algorithms, facial recognition software, and HR recruiting software, all empowered by AI, have displayed instances of bias and discrimination.

AI algorithms can inherit and amplify societal biases in the data they’re trained on. This can lead to discriminatory outcomes in areas like loan approvals, job applications, or criminal justice, which in turn perpetuates social inequalities.

Before such systems can be applied in real-world scenarios, the biases and discrimination baked into them have to be addressed.

However well-intentioned an AI system’s aims may be, ethical concerns can arise if its developers are naive about how it is trained and on what data.

But humans inevitably provide the training data. These are real people with highly nuanced and complex outlooks on the world. AI systems must be developed with additional mechanisms built in to mitigate bias or narrow outputs.
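One example of such a mitigation mechanism is a pre-processing step often called reweighing, which assigns each training example a weight so that group membership and outcome look statistically independent in the weighted data. The sketch below is a minimal illustration under stated assumptions, not a description of any particular system: the group names, labels, and the function `reweighing_weights` are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each (group, label) pair by expected / observed frequency.

    Combinations that are under-represented relative to what independence
    between group and label would predict receive weights above 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for (g, y) in pair_counts
    }


# Hypothetical toy data: group "a" receives the positive label far more often.
groups = ["a", "a", "a", "b", "b", "b", "b", "b"]
labels = [1, 1, 0, 1, 0, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for pair, weight in sorted(weights.items()):
    print(pair, round(weight, 3))
```

In this toy data, positive examples from group "b" are up-weighted and positive examples from group "a" are down-weighted, so both groups end up with the same weighted positive rate. Reweighing addresses only one narrow notion of bias and does not replace a broader review of how the data was collected.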

Accountability

Who is accountable if an AI-powered system makes a harmful error? The developers, the users, or the algorithm itself?

Creating accountability becomes a huge challenge when AI makes misplaced decisions. Critical errors in AI-driven supply chain decision-making could disrupt food supplies. Strategic military operations relying on flawed AI analysis could have devastating consequences.

High-stakes AI demands zero error. The responsibility mostly falls on developers. These entities are under pressure to design balanced systems with rigorous testing and error handling. Organizations deploying AI need vigilant users equipped through training.

This helps them identify biases, ensure proper use, and oversee outputs. Governments and legislators also play a clear role. They must establish legislation and compliance measures to ensure appropriate AI accountability.

Generative and foundation models

Large foundation models and other generative AI systems can create incredibly realistic text, code, and images.

The nonspecific nature and wide scope of foundation models and generative AI mean they can be leveraged for a wide range of activities. However, this is a high-risk area, and it presents ethical concerns when these models are used across diverse industries.

The potential for misuse, such as creating deepfakes, manipulating information, and launching cyberattacks, continues to be a concern. The democratization of foundation and generative models amplifies these risks.

Workforce redundancies

AI has proven exceptionally adept at automated task completion and decision-making. This has put job displacement at the forefront of AI ethical concerns.

Reskilling, upskilling, and AI-centred opportunities must be created for the share of the global workforce whose jobs are affected or replaced by AI.

This helps the move towards AI avoid disruptions that could have wider societal consequences for workers worldwide.

Sustainability and environmental concerns

AI’s adaptability has thrust job displacement to the forefront of ethical concerns. However, the ethical landscape extends beyond workforce disruption.

The vast computational resources needed to train and run complex AI models can have a significant carbon footprint. The data storage required to house highly complex AI systems around the clock is immense.

Data centers, the resources poured into R&D, and the physical hardware of our increasingly AI-powered world all contribute to this environmental impact.

Preemptive actions prioritizing sustainability are key to ensuring AI’s positive impact isn’t overshadowed by its environmental cost.

Cybersecurity

As AI evolves, so do the tactics of threat actors and cybercriminals.

Hackers could exploit vulnerabilities in AI systems to manipulate outcomes, steal sensitive data, or disrupt critical infrastructure.

A McKinsey survey reveals only 18% of respondents have enterprise-wide councils for responsible AI. Only a third of respondents believe technical staff need skills to identify and mitigate risks associated with advanced AI.

The vast amounts of data empowering AI algorithms can also empower equally powerful threat systems. AI is a double-edged sword. It can be trained for both beneficial and malicious purposes. For example, AI can launch personalized phishing attacks, detect system vulnerabilities, and automate large-scale attacks.

AI’s growing ubiquity also increases the attack surface for cybercriminals. It acts as a catalyst and entry point into secure infrastructures. Concrete cybersecurity measures are needed to protect against these risks.

What organizations promote AI ethics?

Artificial intelligence (AI) isn’t going away anytime soon. It continues to become a part of our lives, with tools for boosting productivity and transforming industries emerging daily.

Ensuring AI is built, deployed, and managed ethically and responsibly is key. This section delves into the critical role of organizations championing ethical AI. We’ll explore the work of key players actively shaping a future where AI serves society for the good.

Partnership on AI (PAI)

Partnership on AI (PAI) is a global initiative that bridges the gap between governments, technology companies (like Microsoft and Google), and civil society organizations to tackle critical issues like algorithmic bias.

For example, their “Eyes Off My Data” framework helps identify and mitigate biases in AI datasets. It helps ensure fairer outcomes across diverse populations.

The Algorithmic Justice League (AJL)

The Algorithmic Justice League (AJL) is a Massachusetts-based non-profit focusing on the public interest in algorithmic fairness and the harmful impacts of tech.

AJL conducts in-depth research to uncover and expose potential biases within AI systems, such as those used in criminal justice, hiring processes, or loan approvals.

These efforts analyze how AI impacts public discussions and government collaboration. Based on this analysis, they advocate for policy changes and regulations to ensure algorithms are used fairly. This helps mitigate potential societal risks like bias.

The Future of Life Institute (FLI)

The Future of Life Institute (FLI) aligns technological advancements with human well-being. This non-profit conducts research that explores AI risks and concerns. These include superintelligence and autonomous weapons.

FLI is seeking research proposals to evaluate the impact of Artificial Intelligence (AI) on key Sustainable Development Goals (SDGs) like poverty, healthcare, and climate change. This research will assess both AI’s current and anticipated impacts on these crucial global challenges.

The Ethics and Governance of Artificial Intelligence Initiative (EGAI)

A joint project by MIT Media Lab and the Harvard Berkman-Klein Center for Internet and Society, the Ethics and Governance of Artificial Intelligence Initiative (EGAI) seeks to “ensure that technologies of automation and machine learning are researched, developed, and deployed in a way which vindicates social values of fairness, human autonomy, and justice.”

This philanthropic organization focuses on research, prototyping, and advocacy. It aims to work in the most impactful, near-term arenas of automation and machine learning deployment.

Presently, EGAI efforts are directed towards three main domains. These are AI and Justice, Information Quality, and Autonomy and Interaction.

These domains cover fair and legal frameworks for AI in public services. They also assess AI’s impact on public discourse and collaboration in governance.

The Association for the Advancement of Artificial Intelligence (AAAI)

This international society for AI research has championed responsible development since 1979. Through its guidelines and publications, the Association for the Advancement of Artificial Intelligence (AAAI) encourages researchers to consider the ethical implications of their work from the outset.

Their “Code of Professional Ethics and Conduct” outlines key principles like fairness, accountability, and transparency and serves as the foundation for ethical decision-making.

The code is designed to guide and inspire “current and aspiring practitioners, instructors, students, and influencers” to use AI tech in an impactful way.

How to ensure ethical AI practices

The surest way to use AI ethically is to learn how to manage its adoption responsibly.

Let’s explore the best practices for ensuring AI remains a driver of innovation, not risk.

Prioritizing fairness and transparency

Fairness and transparency are at the core of ethical AI. This involves identifying and mitigating potential biases in training datasets.

Techniques like fairness audits and bias detection algorithms can help uncover skewed data that might lead to unethical outcomes.

AI models also need to be designed to explain their reasoning processes, so that users gain a deeper understanding of how AI arrives at decisions.
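As a rough illustration of what one step of a fairness audit might look like, the Python sketch below compares how often each group receives a positive decision and reports the ratio between the lowest and highest rates. The data, group names, and function names are hypothetical. The 0.8 threshold reflects the commonly cited "four-fifths" rule of thumb and is an assumption here, not a universal standard.

```python
from collections import defaultdict

# Assumed rule-of-thumb threshold; the right value depends on context.
RATIO_THRESHOLD = 0.8


def selection_rates(groups, decisions):
    """Return the share of positive decisions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += int(decision)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical decisions: 6 of 10 approved in group "a", 3 of 10 in group "b".
groups = ["a"] * 10 + ["b"] * 10
decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7

rates = selection_rates(groups, decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < RATIO_THRESHOLD
print(f"rates={rates} ratio={ratio:.2f} flagged={flagged}")
```

A flagged result is a prompt for closer human investigation rather than proof of discrimination, since selection rates alone ignore legitimate differences between applicants.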

Design & development with humans in mind

Human-centered design principles ensure AI development focuses on user needs and societal well-being. This necessitates ongoing collaboration between AI developers, ethicists, and stakeholders from the target population.

User testing throughout the development process helps identify potential biases. It ensures AI systems are designed for inclusivity and accessibility.

Furthermore, integrating human oversight mechanisms allows for human intervention in critical situations. This helps maintain control and mitigate potential risks.
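A human oversight mechanism can be as simple as routing uncertain or high-stakes predictions to a person instead of acting on them automatically. The sketch below is one possible shape for that idea. The `REVIEW_THRESHOLD` value, the `Decision` type, and the `route` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass

# Hypothetical confidence threshold below which a human must review.
REVIEW_THRESHOLD = 0.9


@dataclass
class Decision:
    label: str
    confidence: float
    needs_human_review: bool


def route(label: str, confidence: float, high_stakes: bool = False) -> Decision:
    """Flag a model output for human review when it is uncertain or high-stakes."""
    needs_review = high_stakes or confidence < REVIEW_THRESHOLD
    return Decision(label, confidence, needs_review)


# Hypothetical model outputs.
print(route("approve", 0.97))
print(route("approve", 0.62))
print(route("deny", 0.99, high_stakes=True))
```

Treating every high-stakes case as reviewable, regardless of model confidence, reflects the point that confidence scores alone are not a reliable safeguard in critical situations.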

Protecting security and privacy

The sheer amount of data required for AI training calls for stronger security measures. Implementing encryption techniques and access controls protects sensitive data from unauthorized access or manipulation.

Privacy considerations are equally important. Privacy-enhancing technologies like differential privacy can help ensure data used for training cannot be linked back to individuals. This protects user privacy while enabling the ethical development of AI.
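To give a feel for how differential privacy works, the sketch below applies the Laplace mechanism to a simple counting query: calibrated random noise is added to the true count so that the presence or absence of any one person changes the output distribution only slightly. The dataset, the `epsilon` value, and the function names are hypothetical. Python’s `random` module is used for readability only, and a production system would more likely rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of matching values.

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical ages; the true number of people aged 40 or over is 3.
ages = [23, 35, 41, 29, 52, 67, 38]
rng = random.Random(0)  # fixed seed so the demo is repeatable
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print(f"noisy count: {noisy:.2f}")
```

Smaller values of `epsilon` add more noise and give stronger privacy at the cost of accuracy, so choosing it is a policy decision as much as a technical one.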

Ongoing assessment and evaluation

Ethical AI is an ongoing process with no discernible end-point. Regular monitoring and evaluation are crucial for identifying potential issues after deploying AI systems. Metrics for fairness, transparency, and societal impact should be established and monitored.

Methods for users to provide feedback also allow for ongoing iterations and adaptations of AI systems. This ensures they remain aligned with ethical principles and that AI serves its intended purpose responsibly.

The future of ethical AI

The future of AI is bright, but its ethical development is crucial. As AI becomes more ingrained in society, we must ensure its creation and use adhere to ethical principles.

This requires collaboration between researchers, developers, policymakers, and the public. Key challenges include transparency in AI decision-making, mitigating biases in training data, and establishing clear lines of accountability.

Protecting sensitive data requires robust security measures and privacy-enhancing technologies. Human-centered design that prioritizes user needs and well-being is paramount.

Developing ethical frameworks and regulations for AI use is crucial. Addressing these challenges helps us build a future where AI serves as a tool for good, amplifying human capabilities and driving innovation across various sectors.

Ethical considerations must be embedded throughout the AI lifecycle, from its development to deployment and ongoing evaluation. Researchers must consider the ethical implications of their work from the start.

Developers should design AI systems that are transparent, fair, and accountable. Policymakers have a role in developing frameworks for ethical AI development and use.

The public needs to stay informed and engaged in the conversation about ethical AI. Ethical AI is a continuous journey, not a destination. By working together, we can ensure that AI serves humanity for the betterment of all.