Generative AI is undoubtedly transforming business and technology, presenting opportunities for efficiency and innovation that are capturing the attention of business leaders globally.

Yet, caution is warranted. The excitement around AI’s potential must be balanced with a thorough understanding of associated risks, particularly in AI risk management and regulatory compliance.

This is precisely where an AI Acceptable Use Policy (AI AUP) becomes essential.

Alongside other risk management strategies, an AI AUP supports the stable, long-term use of generative AI in your organization.

This article will provide a comprehensive overview that:

- Explains the different meanings of an AI Acceptable Use Policy

- Examines the key characteristics of any AI Acceptable Use Policy

- Gives an overview of the businesses that will need an AI Acceptable Use Policy

- Describes the main business use cases for an AI Acceptable Use Policy

- Points out some further reading about AI ethics

Generative AI makes us stop and ask many new questions about how we deploy business IT. A usage policy is just one of the key steps you need to take to make sure that AI does you more good than harm.

What is an AI Acceptable Use Policy?

An AI Acceptable Use Policy (AI AUP) is a set of guidelines that outline the responsible and ethical use of artificial intelligence within a specific context.

Typically, an AI AUP addresses issues such as data privacy, transparency, fairness, and compliance with relevant laws and regulations.

The policy supports best practices in AI, ensuring that the organization’s use of AI aligns with ethical and legal standards.

An AI use policy provides a guiding framework for managing many of the risks associated with AI.

A policy safeguards sensitive data, promotes transparency, and mitigates biases in AI decision-making. It also assigns accountability and responsibility, fostering trust among stakeholders.

Organizations with an AI AUP protect themselves from potential legal and reputational risks and demonstrate their commitment to using AI for the benefit of the organization, its stakeholders, and society at large.

The key characteristics of an AI Acceptable Use Policy

Each business decides for itself what “acceptable use” means.

How you use technology, what your customers need, and how experienced your staff are can all shape what responsible AI use looks like for you.

So, what will be part of your AI acceptable use policy? Some of the key aspects are likely to be:

- Compliance with Laws and Regulations

- Data Privacy and Security

- Transparency

- Fairness and Bias Mitigation

- Accountability

- Use Case Guidelines

- Ethical Considerations

- Monitoring and Auditing

- Training and Awareness

- Data Quality and Accuracy

But acceptable use policies vary considerably in what they emphasize.

According to a 2023 meta-analysis of global policies, many use policies emphasize privacy, transparency, and accountability, all of which are important matters.

However, far fewer address truthfulness, intellectual property, or children’s rights, which are arguably more important, even if they are harder to police.

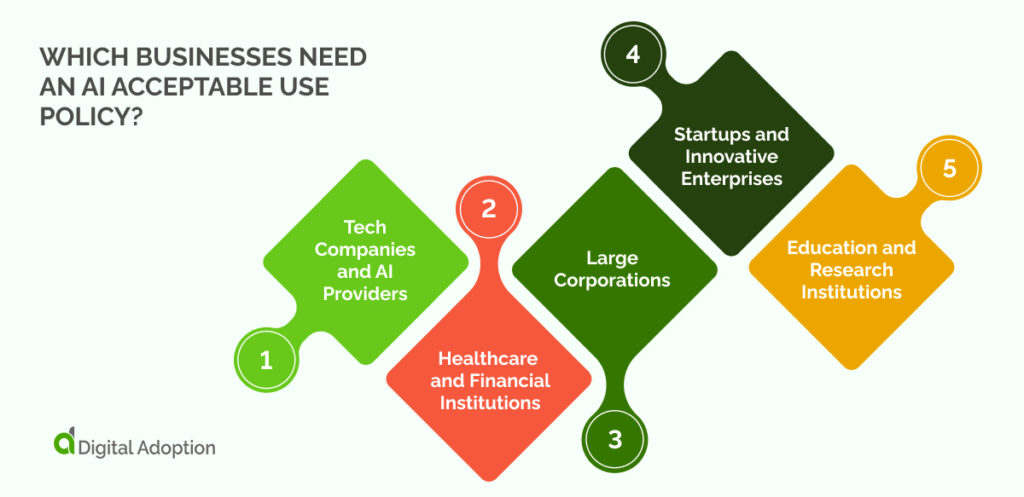

Which businesses need an AI Acceptable Use Policy?

Introducing an AI AUP should be a priority for a wide range of businesses, particularly those that heavily rely on or are influenced by artificial intelligence.

Here are some types of businesses that should prioritize the establishment of such policies:

Tech Companies and AI Providers

Tech companies, especially those providing AI services, should lead the way in implementing AI AUPs. These policies not only guide their internal operations but can also help set industry standards.

Healthcare and Financial Institutions

Businesses in the healthcare and financial sectors handle sensitive, highly regulated data. In these industries it is critical to safeguard patient health information and financial data, maintain compliance with strict regulations such as HIPAA and GDPR, and ensure that AI is applied ethically in decision-making processes.

Large Corporations

Large corporations with diverse operations and extensive data handling processes should make establishing an AI AUP a priority. These policies can provide a framework for responsible AI usage, prevent unauthorized AI applications, and ensure transparency, accountability, and compliance across all departments. They also help mitigate risks related to data breaches and operational disruptions.

Startups and Innovative Enterprises

Even smaller businesses and startups should prioritize the implementation of AI AUPs as they integrate AI into their operations. These policies help set clear boundaries for AI usage from the outset, preventing ethical and regulatory pitfalls as they grow and expand their AI-driven initiatives.

Education and Research Institutions

Educational institutions and research organizations should introduce AI AUPs to guide the responsible use of AI in teaching and research. These policies can help ensure that AI technologies are used ethically and that data privacy is protected when conducting research or deploying AI in educational settings.

In summary, AI Acceptable Use Policies are essential for a wide spectrum of businesses, but they are particularly critical for tech companies, healthcare and financial institutions, large corporations, startups, and educational institutions.

What are the business use cases for an AI Acceptable Use Policy?

At present, there are three main business use cases for an AI usage policy:

AI Providers

Companies offering AI services, such as OpenAI (ChatGPT), Google (Bard), and Meta (Llama), recognize the critical need for use policies.

These policies establish clear terms of use and ethical guidelines, such as those found in OpenAI’s usage policy, Google’s generative AI terms of service, and Meta’s Llama acceptable use policy.

They help maintain accountability and promote responsible AI usage, ensuring transparency and compliance with legal and ethical standards.

Third-Party App Developers

Prominent software vendors such as Salesforce have introduced AI acceptable use policies covering the AI features in their products.

This proactive approach assures customers that their data will be handled with care and consideration.

Salesforce’s August 2023 policy demonstrates a commitment to responsible AI practices, enhancing trust and accountability.

Corporate End Users

As businesses increasingly integrate AI into their operations, employees often explore AI applications independently, sometimes without proper regulation.

This has created a need for AI acceptable use policies.

To avoid issues such as unauthorized AI usage and data leaks, some companies have taken drastic measures. In May 2023, for example, Samsung temporarily banned employees from using generative AI tools such as ChatGPT after staff accidentally uploaded sensitive internal source code to the service.

An AI acceptable use policy offers a proactive way to monitor and regulate AI usage within an organization, ensuring ethical, compliant, and secure AI practices.

AI Acceptable Use and the ethics of AI tools

AI is a diverse landscape of emerging technologies, and your company may need a more nuanced approach to the problems they raise.

Fortunately, the field of AI ethics is helping businesses and governments better understand the challenges.

The following resources can help you learn more about the responsible use of AI technology.

- Some organizations are shedding light on the broader considerations of morality and ethics in AI. Organizations such as the Center for AI Safety have taken a pioneering role in developing comprehensive guidelines to address the ethical dimensions of AI. These guidelines tackle the fundamental questions that arise from the use and development of AI, establishing a foundation for responsible AI deployment.

- AI use is not identical across every business. So, domain-specific AI guidelines can help to focus your attention on the critical issues in your industry. Whether it’s AI in college admissions, legal practice, healthcare, or talent development, these applications come with their own set of ethical dilemmas. Industry bodies are starting to provide valuable insights into the evolving ethical considerations surrounding AI in these specialized contexts.

- Some organizations are keeping a close eye on how AI policy is developing as a business sub-discipline. For example, AlgorithmWatch maintains a global inventory of AI ethics guidelines. Whatever your business, these resources let you compare how other organizations navigate the inherent risks of AI.

AI usage policies and the future of digital trust

On the path to using AI responsibly, an AI Acceptable Use Policy is essential, but it may not be your first step. As we’ve seen, you must think carefully about the ethical challenges and opportunities that AI brings, even when they are not yet clear. By doing so, organizations can continue to build the digital trust that is crucial to using AI safely.