AI privacy concerns refer to the potential risks associated with using artificial intelligence technology, such as data breaches, unauthorized access to personal information, the amplification of harmful biases, and the generation of sensitive personal data.

Technology can be unpredictable, and its advancements can sometimes outpace our ability to plan how to use it safely.

This is especially true in an increasingly AI-driven world, where addressing privacy concerns is essential to protect individuals’ privacy rights while enjoying the benefits of AI technology.

AI privacy concerns are a good example of an IT strategic trend that has moved faster than our plans for it. The arrival of ChatGPT, Google Bard, and their competitors has wowed us with powerful features while we overlook the large amounts of data these tools collect.

Every enterprise must tackle data privacy concerns as part of its AI risk management, both to protect itself against data breaches and to maintain its reputation.

To help you understand what AI privacy concerns are and how to address them, we will explore the following topics:

- How can AI be an invasion of privacy?

- What data does AI collect?

- What laws address AI privacy concerns?

- What 5 types of AI can invade your privacy?

- How can you prevent AI from invading your privacy?

- What are the biggest future AI privacy concerns?

- Research the privacy policy before implementing a new AI tool

How can AI be an invasion of privacy?

ChatGPT and other forms of artificial intelligence collect information on how users communicate with their systems. Through a process called training, AI developers use this collected data to improve their systems and make them smarter.

The information they collect includes what users say, what happened in past chats, how the AI system responds, or even photographic images of people’s faces if an organization uses facial recognition systems.

OpenAI, the company behind ChatGPT, says it takes keeping information private and secure very seriously.

Before a session begins, ChatGPT displays a message reminding users not to enter sensitive information.


The company says it works to ensure that data can’t be linked back to specific people. It also says it has safeguards to keep stored information from being hacked or misused. Doing so helps it comply with data privacy rules.

What data does AI collect?

AI tools collect whatever data you feed them. They log this information, including personal details such as your address or a customer’s, to learn and refine the service they offer users.

A chatbot generally collects only the data you enter or connect it to, so if there is data you don’t want it to have, avoid entering it and don’t give it access to databases of sensitive data.
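One way to put this into practice is to strip obvious personal details from a prompt before it leaves your machine. The sketch below is a minimal illustration, not a complete solution: the `PATTERNS` table and the `redact` function are hypothetical names, and the two regular expressions only catch simple email addresses and phone numbers.

```python
import re

# Hypothetical patterns for illustration only. Real PII detection needs far
# broader coverage (names, postal addresses, ID numbers, and so on).
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each matched detail with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Email jane.doe@example.com or call +1 415-555-0100 about the order."
safe_prompt = redact(prompt)
print(safe_prompt)  # Email [EMAIL] or call [PHONE] about the order.
```

Only `safe_prompt` would then be pasted into or sent to the AI tool. A filter like this reduces accidental leaks but does not replace judgment about what to share.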

What laws address AI privacy concerns?

Regulations such as the European Union’s General Data Protection Regulation (GDPR) govern how organizations, including those that build or use AI, handle people’s personal data.

The GDPR insists that organizations treat personal data carefully, ensuring it stays secure and confidential and is used appropriately.

Organizations must take suitable technical and organizational steps to guard personal data against unauthorized access, breaches, and cyber threats. Adhering to GDPR and similar privacy laws helps reduce the privacy concerns linked to AI systems.
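Pseudonymization is one of the technical measures GDPR itself names. As a rough sketch of the idea, the example below replaces an email address with a keyed hash before a record is passed to an AI system. The `pseudonymize` function, the sample record, and the key are all hypothetical, and a real deployment would load the key from a secrets manager rather than the source code.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest that stands in for the raw identifier."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key for illustration only; never hard-code a real key.
key = b"example-key-do-not-use"

record = {"email": "jane.doe@example.com", "question": "Where is my order?"}

# Copy the record, swapping the email for its pseudonym.
safe_record = {**record, "email": pseudonymize(record["email"], key)}
```

The same input and key always yield the same pseudonym, so records can still be grouped by customer, while anyone without the key cannot easily recover or recompute the original address.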

The US is still developing legislation to regulate the use of AI and the privacy concerns around this relatively new technology. Until that legislation is in place, companies can base their policies on GDPR.

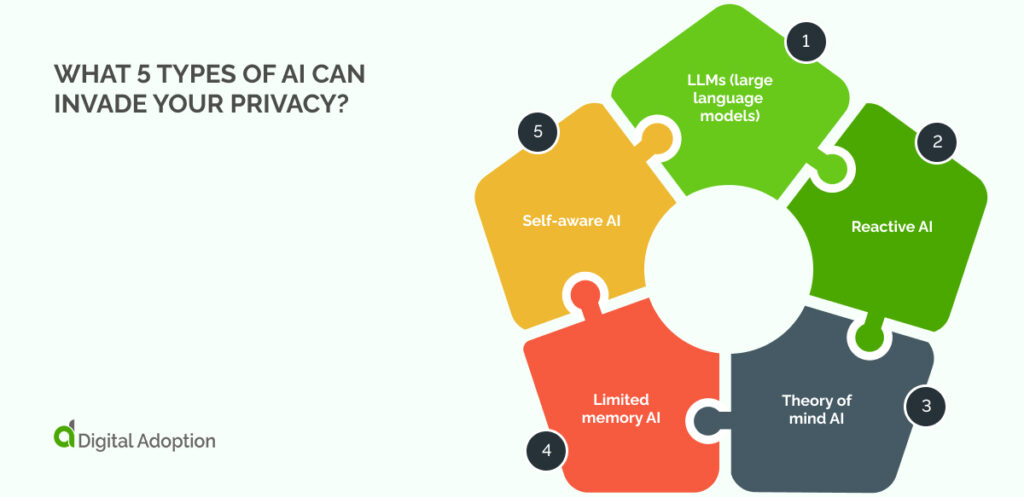

What 5 types of AI can invade your privacy?

Various forms of AI have the potential to encroach upon privacy boundaries.

1. LLMs (large language models)

Large language models (LLMs) can inadvertently expose personal information through the text they analyze and generate.

2. Reactive AI

Reactive AI, designed to respond to specific stimuli, might inadvertently access sensitive data without proper safeguards.

3. Theory of mind AI

Systems incorporating the theory of mind, aiming to understand human perspectives, raise concerns about handling private thoughts.

4. Limited memory AI

Limited memory AI may store and inadvertently disclose confidential data from past interactions.

5. Self-aware AI

The concept of self-aware AI, which remains hypothetical, introduces the potential for intentional privacy breaches, as such systems might exploit vulnerabilities.

Across all five types, vigilance and ethical considerations are essential in developing and deploying AI to safeguard individuals’ privacy.

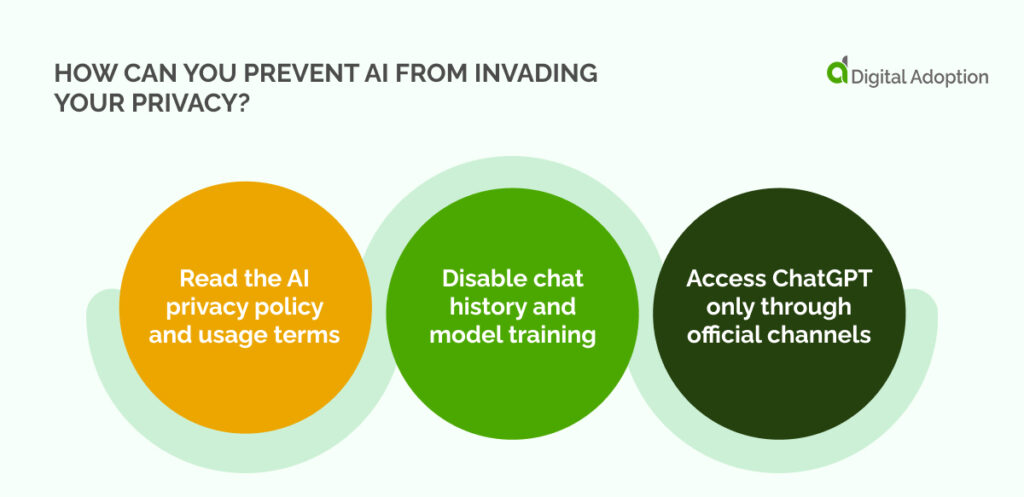

How can you prevent AI from invading your privacy?


1. Read the AI privacy policy and usage terms

Before giving any of your personal information to ChatGPT or other types of AI, it’s best to read and understand their policies. Bookmark the policies so you can refer to them often, as they can always change without warning.

See the privacy policies or usage terms for four popular LLM tools:

- Bard generative AI additional terms of service

- PaLM generative AI additional terms of service

- Claude terms and conditions

- ChatGPT privacy policy

2. Disable chat history and model training

You can prevent your conversations with ChatGPT from being saved to your chat history and opt out of your data being used to train OpenAI’s models.

To opt out of model training, click the three dots at the bottom of ChatGPT, go to Settings > Data controls, and click the “Chat history & training” switch to turn it off.

Even if you opt out, OpenAI still stores your chats on its servers for 30 days, during which staff can access them to monitor for abuse.

3. Access ChatGPT only through official channels

To avoid supplying personal data to scammers or apps pretending to be ChatGPT, only use ChatGPT by visiting https://chat.openai.com or downloading the official ChatGPT app for iPhone, iPad, and Android.

What are the biggest future AI privacy concerns?

The most significant concern regarding AI’s future lies in its potential impact on employment and the job market. As AI technologies advance, there’s a growing apprehension about automation replacing human jobs across various industries.

The fear centers on job displacement and the challenge of reskilling the workforce to adapt to the evolving job landscape, as statistics on the risks of AI show.

Striking a balance between the benefits of AI-driven efficiency and the societal repercussions of unemployment is a critical challenge.

Addressing these concerns requires proactive measures, such as robust education and training programs, to equip individuals with the skills needed in a technology-driven future.

Research the privacy policy before implementing a new AI tool

Before integrating a new AI tool, meticulous research into its usage terms is crucial to ensure you follow the best AI compliance practices.

Understanding the software’s limitations, data handling policies, and potential privacy implications ensures informed decision-making.

It also helps you weigh privacy protection against the potential benefits of AI as you plan your digital transformation.

Knowing which safeguards to use against unexpected issues, and monitoring usage terms and privacy policies for changes to data collection, promotes the responsible and ethical deployment of AI technologies.
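Ongoing monitoring of a policy can be as simple as keeping a fingerprint of its text and comparing it on each review. The sketch below assumes you have already copied the policy text from the vendor’s page; `fingerprint`, `has_changed`, and the sample sentences are hypothetical and exist only to illustrate the idea.

```python
import hashlib


def fingerprint(policy_text: str) -> str:
    """Hash the policy text after collapsing whitespace, so reflowed text is not flagged."""
    normalized = " ".join(policy_text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def has_changed(saved_fingerprint: str, current_text: str) -> bool:
    """Report whether the current policy text differs from the saved version."""
    return fingerprint(current_text) != saved_fingerprint


# Hypothetical policy sentences, not quotes from any real vendor.
saved = fingerprint("We retain chats for 30 days.")

same_wording = has_changed(saved, "We retain  chats for\n30 days.")
new_wording = has_changed(saved, "We retain chats for 90 days.")
print(same_wording, new_wording)  # False True
```

A changed fingerprint only signals that the wording differs. Someone still needs to read the new version to judge what the change means for your data.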
