AI adoption is increasing, and the technology is making waves across industries for its ability to perform tasks that once required human intelligence. Large language models and generative AI rely on huge amounts of pre-training data to operate.

AI engineers are now realizing that this data can be repurposed to enable these models to complete more targeted and complex tasks.

Prompt engineers have noticed this and are hoping to leverage this untapped potential. Engineers are turning to meta-prompting to develop reliable and accurate AI. This prompt design technique involves creating instructions that guide LLMs in generating more targeted prompts.

This article will delve into meta-prompting, a powerful AI technique. We’ll examine its unique approach, provide illustrative examples, and explore practical applications. By the end, you’ll grasp its potential and learn how to incorporate meta-prompting in your AI-driven projects.

What is meta-prompting?

Meta-prompting is a technique in prompt engineering where instructions are designed to help large language models (LLMs) create more precise and focused prompts.

It provides key information, examples, and context to build prompt components. These include things like persona, rules, tasks, and actions. This helps the LLM develop logic for multi-step tasks.
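As a minimal sketch, the components named above could be assembled into a meta-prompt like this. The helper name `build_meta_prompt`, its field labels, and the sample values are illustrative assumptions, not part of any library.

```python
def build_meta_prompt(persona: str, rules: list[str], task: str, actions: list[str]) -> str:
    """Assemble a meta-prompt that asks a model to write a prompt (hypothetical helper)."""
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    action_lines = "\n".join(f"{i}. {action}" for i, action in enumerate(actions, start=1))
    return (
        f"Persona: {persona}\n"
        f"Rules:\n{rule_lines}\n"
        f"Task: {task}\n"
        f"Actions:\n{action_lines}\n"
        "Using the information above, write a prompt that another model can follow."
    )


# Sample values are made up for illustration.
meta_prompt = build_meta_prompt(
    persona="You are a senior market analyst.",
    rules=["Use plain language.", "State any assumptions."],
    task="Produce a prompt for a detailed market analysis.",
    actions=["List the steps.", "Name tools or data sources for each step."],
)
print(meta_prompt)
```

Keeping each component as a separate argument makes it easy to change one part, such as the rules, without rewriting the whole prompt.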

Additional instructions can improve LLM responses. Each new round of prompts strengthens the model’s logic, leading to more consistent outputs.

This approach is a game-changer for AI businesses. It allows them to get targeted results without the high costs of specialized solutions.

By using meta-prompting effectively, businesses can more economically leverage the flexibility of LLMs for various applications.

How does meta-prompting work?

Meta-prompting leverages an LLM’s natural language understanding (NLU) and natural language processing (NLP) capabilities to create structured prompts. This involves generating an initial set of instructions that guide the model toward producing a final, more tailored prompt.

The process begins by establishing clear rules, tasks, and actions that the LLM should follow. By organizing these elements, the model is better equipped to handle multi-step tasks and produce consistent, targeted results.

With enough examples and structured guidance, the prompt design process becomes more automated, allowing users to achieve focused outputs. This method enables pre-trained models to adapt to tasks beyond their original design, offering a flexible framework that businesses can use for various applications.
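The two-stage flow described in this section can be sketched roughly as follows. The `stub_llm` function is a stand-in for a real model call and returns canned text so the example runs offline; `run_meta_prompting` is a hypothetical name, and a real setup would swap in an actual LLM client.

```python
from typing import Callable


def run_meta_prompting(meta_prompt: str, user_input: str, llm: Callable[[str], str]) -> str:
    """Stage 1: ask the model to write a tailored prompt. Stage 2: run that prompt."""
    generated_prompt = llm(meta_prompt)
    return llm(f"{generated_prompt}\n\nInput: {user_input}")


def stub_llm(prompt: str) -> str:
    """Stand-in for a real model call (assumption): returns canned text."""
    if prompt.startswith("Write a prompt"):
        return "You are a support agent. Answer in two sentences."
    return f"[model answer to: {prompt}]"


answer = run_meta_prompting(
    meta_prompt="Write a prompt for a customer-support assistant.",
    user_input="How do I reset my password?",
    llm=stub_llm,
)
print(answer)
```

Passing the model in as a callable keeps the sketch independent of any particular provider.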

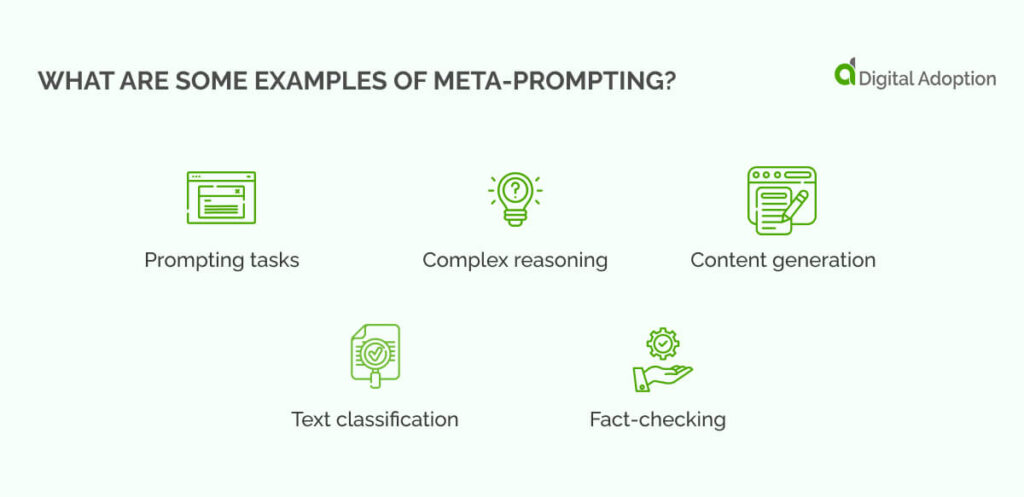

What are some examples of meta-prompting?

Let’s look at some real-world uses of meta-prompting. These examples show how it can be used in different areas.

Prompting tasks

Meta-prompting for tasks guides the AI through step-by-step processes with clear instructions.

A good task automation prompt might start with, “List the steps to do a detailed market analysis.” Then, the model can be asked to refine the process: “Break down each step and give examples of tools or data sources.”

This approach ensures the AI covers the task fully by addressing both scope and depth. It makes the output more useful and better aligned with what the user wants.

Complex reasoning

In complex reasoning, meta-prompting guides AI through problems in a logical way.

An example might start with, “Evaluate how climate change affects farming economically.” After the first answer, the meta-prompt could ask, “Now, compare short-term and long-term effects and suggest ways to reduce them.”

Structuring prompts to build on prior thinking allows AI to process complex ideas fully. This approach produces outputs showing deeper, multi-dimensional understanding.

Content generation

For content creation, meta-prompting uses step-by-step refinement to improve quality and relevance. An example might start with, “Write a 300-word article about the future of electric cars.”

Once the draft is done, the meta-prompt could ask, “Expand the part about battery tech advances, including recent breakthroughs.”

This method ensures that AI-generated content evolves to meet specific standards. It refines based on focused follow-ups to include precise, valuable details. The process also ensures consistency and alignment with the intended output.
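One way to picture this draft-then-refine loop is as a growing conversation history. The sketch below assumes the common role/content chat message convention; `ask` and `stub_llm` are hypothetical names, and the stub only reports which revision it would be producing.

```python
from typing import Callable

Message = dict[str, str]


def ask(history: list[Message], request: str, llm: Callable[[list[Message]], str]) -> list[Message]:
    """Return a new history with the request and the model's reply appended."""
    updated = history + [{"role": "user", "content": request}]
    reply = llm(updated)
    return updated + [{"role": "assistant", "content": reply}]


def stub_llm(messages: list[Message]) -> str:
    """Stand-in for a real chat model (assumption): reports the revision number."""
    user_turns = sum(1 for m in messages if m["role"] == "user")
    return f"draft revision {user_turns}"


history: list[Message] = []
history = ask(history, "Write a 300-word article about the future of electric cars.", stub_llm)
history = ask(history, "Expand the part about battery tech advances, including recent breakthroughs.", stub_llm)
print(history[-1]["content"])  # prints: draft revision 2
```

Because every follow-up is sent along with the earlier turns, the model can revise its previous draft rather than start again.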

Text classification

Meta-prompting for text classification guides AI through nuanced categorization tasks. A practical example might start with, “Group these news articles by topic: politics, technology, and healthcare.”

The meta-prompt could then ask, “For each group, explain the key factors that decided the categorization.”

This step-by-step prompting enhances the AI’s ability to label text correctly and explain its reasoning, helping ensure greater transparency and accuracy in its output.
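A rough sketch of how the classification and explanation requests might be combined and checked is shown below. The JSON reply format, the function names, and the sample reply are assumptions made for illustration; the sample reply is hand-written, not real model output.

```python
import json

LABELS = ("politics", "technology", "healthcare")


def build_classification_prompt(articles: list[str]) -> str:
    """Combine the grouping request and the explanation request in one prompt."""
    numbered = "\n".join(f"{i}. {text}" for i, text in enumerate(articles, start=1))
    return (
        f"Group these news articles by topic: {', '.join(LABELS)}.\n"
        "For each article, explain the key factors that decided the categorization.\n"
        'Reply with a JSON list of objects with the keys "id", "label", and "reason".\n\n'
        f"{numbered}"
    )


def parse_classification(reply: str) -> list[dict]:
    """Parse the reply and reject labels outside the allowed set."""
    items = json.loads(reply)
    for item in items:
        if item["label"] not in LABELS:
            raise ValueError(f"unexpected label: {item['label']}")
    return items


# Hand-written reply standing in for model output (assumption).
sample_reply = '[{"id": 1, "label": "technology", "reason": "Discusses a chip launch."}]'
parsed = parse_classification(sample_reply)
print(parsed[0]["label"], "-", parsed[0]["reason"])
```

Validating labels against a fixed set catches replies that drift outside the requested categories.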

Fact-checking

In fact-checking, meta-prompting can direct the AI to verify claims against reliable sources.

For instance, a starting prompt could be, “Check if this statement is true: ‘Global carbon emissions have decreased by 10% in the last decade.’” After the initial check, a meta-prompt might follow with, “Cite specific data sources or studies to support or refute this claim.”

This process ensures that the AI answers with verifiable, credible information, which improves its fact-checking abilities.

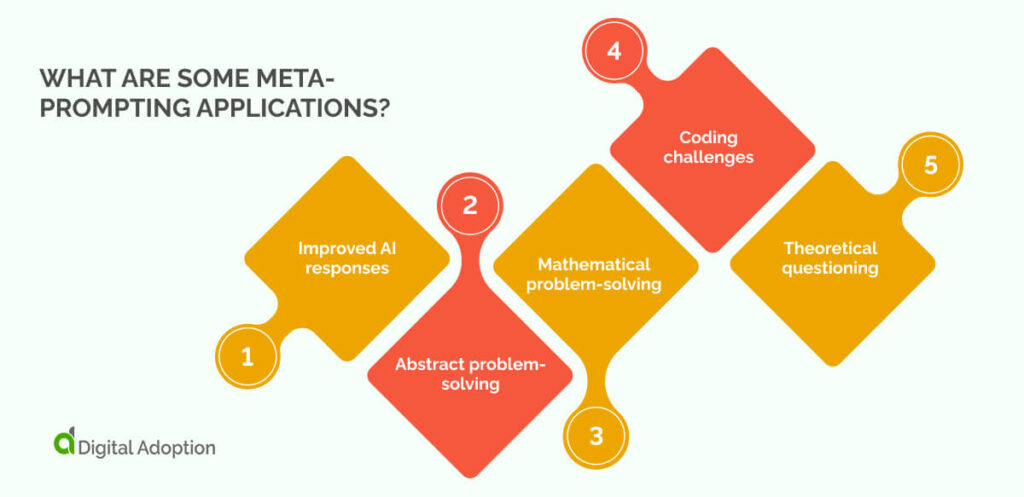

What are some meta-prompting applications?

Now that we’ve seen how to create a meta-prompt with examples, let’s explore some common uses of this method.

Improved AI responses

Meta-prompting improves AI responses by structuring questions or tasks to optimize the output. Through carefully designed prompts, the AI can better understand the nuances of a query, leading to more accurate, context-rich answers.

For example, AI systems can better match user expectations by framing a request with clear instructions or context. This improvement in response quality is especially valuable in areas like customer service, content creation, and tech support, where precision and relevance are crucial.

Abstract problem-solving

Meta-prompting encourages AI systems to think beyond usual solutions, promoting creative and abstract problem-solving. By providing open-ended, exploratory prompts, users can guide AI to offer unique solutions that may not follow traditional patterns.

This ability is particularly useful in areas like strategic planning, brainstorming, and innovation, where new thinking can provide an edge. With meta-prompting, AI systems can explore new approaches and even generate insights that human operators may not have considered.

Mathematical problem-solving

In math contexts, meta-prompting can help break down complex problems into manageable steps. By guiding the AI with structured prompts, users can enable the system to solve problems that require a deep understanding of math principles.

For instance, a prompt like: “Provide a step-by-step explanation for solving quadratic equations using the quadratic formula” ensures a systematic approach. This can be highly beneficial in educational settings, tutoring, or technical research, where clear and precise answers are necessary.
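To check a model's step-by-step answer, it can help to have a reference calculation on hand. The sketch below is one such reference; the function name `solve_quadratic` and the wording of the steps are assumptions for illustration, and it covers real roots only.

```python
import math


def solve_quadratic(a: float, b: float, c: float) -> tuple[list[str], list[float]]:
    """Return the worked steps and the real roots of a*x^2 + b*x + c = 0."""
    if a == 0:
        raise ValueError("a must be non-zero for a quadratic equation")
    steps = [f"1. Identify coefficients: a={a}, b={b}, c={c}"]
    discriminant = b * b - 4 * a * c
    steps.append(f"2. Compute the discriminant: b^2 - 4ac = {discriminant}")
    if discriminant < 0:
        steps.append("3. The discriminant is negative, so there are no real roots")
        return steps, []
    root = math.sqrt(discriminant)
    # A zero discriminant yields the same root twice.
    x1 = (-b + root) / (2 * a)
    x2 = (-b - root) / (2 * a)
    steps.append(f"3. Apply x = (-b ± sqrt(discriminant)) / (2a): x1={x1}, x2={x2}")
    return steps, [x1, x2]


steps, roots = solve_quadratic(1, -5, 6)
print("\n".join(steps))
```

For x^2 - 5x + 6 = 0 the discriminant is 1, giving the roots 3 and 2, which can be compared against the model's explanation.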

Coding challenges

Meta-prompting is valuable for addressing coding challenges, from writing new code to debugging and optimizing existing solutions. Users can specify the programming language, desired output, and problem context to guide AI systems in generating effective code snippets.

For example, a prompt such as “Write a Python script to sort a list of integers in descending order” helps focus the AI’s response on the task. This ability to assist in coding can significantly reduce development time and enhance software quality.
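For reference, one plausible response to that prompt looks like the sketch below. An actual model's output may differ in naming and structure.

```python
def sort_descending(numbers: list[int]) -> list[int]:
    """Return a new list with the integers sorted from largest to smallest."""
    return sorted(numbers, reverse=True)


print(sort_descending([3, 10, -2, 7]))  # prints [10, 7, 3, -2]
```

Having a known-good answer like this makes it easier to judge whether a generated snippet does what was asked.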

Theoretical questioning

Meta-prompting can also help AI engage with theoretical questions, allowing for deeper, more reflective responses. By prompting the system with carefully framed hypotheses or abstract ideas, users can guide the AI to explore philosophical, scientific, or conceptual queries.

This is particularly useful in academic research, strategic thinking, or speculative analysis, where theoretical exploration is key to advancing understanding. Meta-prompting thus helps AI tackle complex theoretical scenarios with greater depth and nuance.

Meta-prompting vs. zero-shot prompting vs. prompt chaining

Meta-prompting, zero-shot prompting, and prompt chaining each offer unique approaches to leveraging AI capabilities.

Let’s take a closer look:

Meta-prompting

Meta-prompting enhances response accuracy by guiding the AI through detailed, strategically designed prompts. This allows for more contextually aware and creative outputs. It focuses on refining the interaction to better meet user expectations.

Zero-shot prompting

Zero-shot prompting gives the model a task with no worked examples or task-specific training. It relies on the AI’s general knowledge to respond to a prompt it has not been specifically prepared for, making it ideal for broad, unspecialized tasks but potentially less precise in niche scenarios.

Prompt chaining

Prompt chaining involves a sequence of interconnected prompts to solve more complex tasks in stages. Each response informs the next, allowing for deeper problem-solving. It is particularly useful for multi-step tasks that require comprehensive understanding but can be more time-consuming due to its iterative nature.
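A minimal sketch of prompt chaining is shown below, where each response is fed into the next prompt. The `{previous}` placeholder, the `run_chain` helper, and the `echo_llm` stand-in are all assumptions for illustration; the stand-in simply wraps its input so the data flow is visible.

```python
from typing import Callable


def run_chain(templates: list[str], llm: Callable[[str], str]) -> list[str]:
    """Run prompts in order, filling {previous} with the prior response."""
    responses: list[str] = []
    previous = ""
    for template in templates:
        previous = llm(template.format(previous=previous))
        responses.append(previous)
    return responses


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call (assumption): wraps the prompt in angle brackets."""
    return f"<{prompt}>"


outputs = run_chain(
    [
        "List the steps to do a detailed market analysis.",
        "Break down each step and give examples of tools or data sources: {previous}",
    ],
    llm=echo_llm,
)
print(outputs[-1])
```

Each stage adds a model call, which is where the extra time cost of chaining comes from.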

Each method has strengths depending on the task’s complexity, specificity, and desired outcome.

Pushing boundaries with meta-prompting

Meta-prompting and other prompt engineering techniques are still new. These techniques are testing the limits of what LLMs can do.

It’s not yet clear if these solutions can perform tasks well and without error. This will depend on how deep the prompting techniques are and, more importantly, on the quality of the data these models are trained on.

Model outputs can become skewed and unusable if the training data is unverifiable, inaccurate, or biased. LLMs can also hallucinate, generating incorrect or misleading information.

As it gets easier to adopt AI solutions, rushing to use them without ethical development frameworks can cause problems.

Prompt engineering will be needed to ensure that businesses use LLM solutions effectively while balancing ethical and responsible development.

This will help companies outpace competitors while having the means to tackle current and future problems through more reliable AI.

People Also Ask

What are the advantages of meta-prompting?

The advantages of meta-prompting include:

- Enhances AI’s self-reflection and adaptive reasoning
- Allows dynamic adjustment of AI behavior during tasks
- Enables meta-level control over AI’s problem-solving approach
- Improves AI’s ability to handle ambiguous or abstract queries
- Facilitates more natural, context-aware AI interactions

What are the limitations of meta-prompting?

Some limitations of meta-prompting are as follows:

- Requires expertise in crafting effective meta-level instructions
- May increase the complexity and processing time for AI responses
- Can lead to unexpected outcomes if meta-instructions conflict
- Potential for recursive loops in poorly designed meta-prompts

What are some key characteristics of meta-prompting?

Meta-prompting key characteristics include:

- Provides high-level instructions to guide AI’s reasoning process
- Enables dynamic adjustment of AI behavior during task execution
- Allows for meta-cognitive abilities in AI, like self-reflection
- Facilitates multi-step, hierarchical problem-solving approaches
- Enhances AI’s ability to handle abstract or conceptual tasks