Machine learning (ML) technologies unveil an exciting new layer of modern computational capabilities.

Decades of research and development in computer science and data science have led to the rise of machine learning models. These models can now perform complex tasks that once required human judgment, such as recognizing images, understanding language, and making predictions.

This subset of artificial intelligence (AI) is taking the world by storm. Its diverse applications mean every industry can benefit from ML solutions. AI in healthcare is being used for more accurate diagnoses and disease predictions. In manufacturing, it’s optimizing supply chains and improving quality control processes.

Because ML’s potential applications are so diverse, blanket solutions aren’t adequate for addressing specific objectives. Understanding the different types of ML models therefore becomes key. ML falls into several categories and subcategories, each designed for particular kinds of problems.

This article delves into the world of machine learning models. We’ll dissect their core types and unveil the subtle distinction between models and algorithms.

What is a machine learning model?

Machine learning (ML) is a subset of AI that leverages data and algorithms to improve its ability to perform specific tasks. It analyzes vast datasets and identifies patterns to make data-driven predictions.

ML algorithms act as blueprints, guiding the construction of ML models. These models are then “trained” on massive amounts of data, learning to perform a designated function.

Training data is essential for developing well-defined ML models, and different problem types require different training approaches. For example, classification and regression models typically use supervised learning, in which the model is trained on labeled data. Unsupervised learning models, by contrast, learn patterns from data without labeled responses.

Supervised ML algorithms learn to infer predictive outcomes from labeled data. In labeled data, each example is paired with its correct output (its label), which lets the algorithm learn how input features map to output labels.

This process involves feeding the model with numerous examples where the input data and output response are known. This allows the algorithm to learn patterns and relationships between the data.

Supervised learning tasks fall into two broad groups: classification and regression. Classification aims to sort data into predefined classes, while regression aims to predict continuous numerical values. Knowing which kind of problem they face helps data scientists choose, compare, and tune algorithms to achieve better model performance.
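To make the distinction concrete, here is a minimal sketch using k-nearest neighbors (k-NN), an algorithm not otherwise discussed in this article, chosen because one procedure can handle both tasks: the neighbors vote on a label for classification and have their values averaged for regression. The housing data and function names are hypothetical.

```python
import math
from collections import Counter

def k_nearest(examples, query, k):
    """Return the k labeled examples (features, target) closest to the query."""
    return sorted(examples, key=lambda ex: math.dist(ex[0], query))[:k]

def knn_classify(examples, query, k=3):
    """Classification: predict the most common label among the k nearest neighbors."""
    labels = [label for _, label in k_nearest(examples, query, k)]
    return Counter(labels).most_common(1)[0][0]

def knn_regress(examples, query, k=3):
    """Regression: predict the mean numeric target of the k nearest neighbors."""
    values = [value for _, value in k_nearest(examples, query, k)]
    return sum(values) / len(values)

# Hypothetical homes described by (floor area in m², number of rooms).
# Features are left unscaled to keep the example short.
homes = [(50, 2), (60, 2), (80, 3), (150, 5), (170, 5), (200, 6)]
home_types = ["apartment", "apartment", "apartment", "house", "house", "house"]
prices = [150_000, 175_000, 230_000, 400_000, 440_000, 510_000]

print(knn_classify(list(zip(homes, home_types)), (70, 3)))  # apartment
print(knn_regress(list(zip(homes, prices)), (70, 3)))       # 185000.0
```

Both functions look at the same three neighbors; only the type of output differs, a discrete class in one case and a continuous value in the other. In practice, features on different scales (square meters versus room counts) would usually be standardized first.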

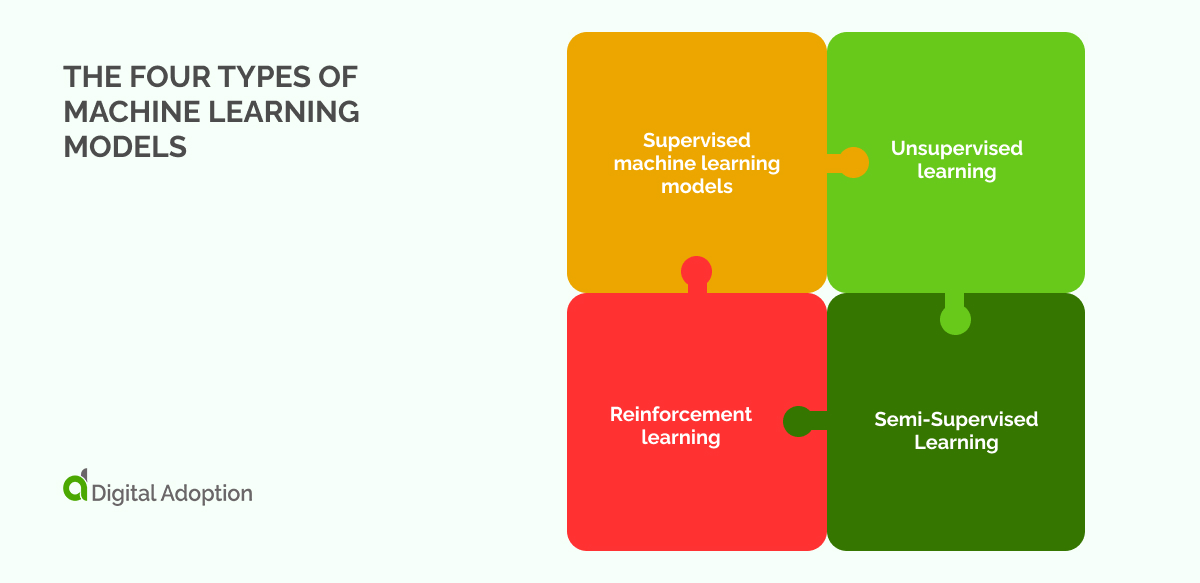

What are the four types of machine learning models?

We now understand what machine learning models are and how they are developed. Let’s explore four types of machine learning models primarily pursued by data scientists.

ML models can be developed in various ways. Training can be supervised, where systems learn from labeled data, or unsupervised, where the model finds structure in data that carries no labels.

The diversity of available ML models means sourcing the right solution requires a strategic approach. There’s no one-size-fits-all model. Stakeholders must weigh their priorities and ensure the chosen solution balances these demands.

ML models tend to perform better as more high-quality training data becomes available. Periodic retraining helps a model adjust to new data and shifting patterns, allowing it to refine its predictions and stay accurate over time.

1. Supervised machine learning models

Supervised machine learning algorithms are geared toward two main kinds of problems: classification and regression.

Many data-driven problems can be framed as classification or regression. Classification involves designing systems that sort information into discrete categories. The patterns learned from labeled examples are then applied to identify and label new data correctly.

Regression algorithms predict continuous outcomes. For instance, a real estate regression model can estimate a property’s value based on location, size, and market trends.

Let’s explore how supervised ML models are designed to address these two types of problems.

Classification

ML algorithms designed for classification are trained to recognize data points and assign each one to a predefined class.

These algorithms learn from labeled data to recognize and sort information into identifiable categories, e.g., blue, child, dog, etc. Once trained, classification algorithms produce discrete outcomes, such as yes/no answers. This is useful for supporting decision-making processes in real-world scenarios.

Consider a radiology tool used by healthcare professionals who need to identify the presence of a specific disease in X-ray images. By training on labeled images, the algorithm learns to classify new images as either showing the disease or not. This can help radiologists make quicker, more accurate diagnoses.

Common classification machine learning algorithms used by data scientists include:

Naïve Bayes: This algorithm applies Bayes’ theorem under the simplifying assumption that features are independent of one another given the class. It predicts the most likely category from how probable each observed feature is within each class, which makes it fast to train and apply. It excels in text analysis and email sorting, and its speed makes it a popular choice for real-time applications such as spam filtering and sentiment analysis.

Support Vector Machines (SVMs): SVMs separate data categories by finding the hyperplane that maximizes the margin between classes. Using kernel functions, they can also learn nonlinear boundaries. Their strength is handling high-dimensional data, which makes them a common choice for image recognition and bioinformatics.
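As a rough illustration of how Naïve Bayes scores text, here is a minimal multinomial Naïve Bayes spam filter written from scratch. The six training emails are hypothetical, and add-one smoothing plus whitespace tokenization are simplifying choices, not the only way to build such a filter. Scores are summed in log space to avoid multiplying many small probabilities together.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(documents):
    """documents: list of (text, label). Returns class priors, word counts, vocabulary."""
    class_counts = Counter(label for _, label in documents)
    word_counts = defaultdict(Counter)  # label -> Counter of words seen with that label
    vocabulary = set()
    for text, label in documents:
        words = text.lower().split()
        word_counts[label].update(words)
        vocabulary.update(words)
    priors = {label: n / len(documents) for label, n in class_counts.items()}
    return priors, word_counts, vocabulary

def classify_naive_bayes(model, text):
    """Pick the label with the highest log P(label) + sum of log P(word | label)."""
    priors, word_counts, vocabulary = model
    best_label, best_score = None, -math.inf
    for label, prior in priors.items():
        total_words = sum(word_counts[label].values())
        score = math.log(prior)
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen during training
            # Add-one (Laplace) smoothing keeps an unseen word/label pair from zeroing the score
            count = word_counts[label][word]
            score += math.log((count + 1) / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical training emails
training_emails = [
    ("win money now", "spam"),
    ("limited offer win prize", "spam"),
    ("cheap money offer", "spam"),
    ("meeting at noon", "ham"),
    ("project meeting notes", "ham"),
    ("lunch at noon tomorrow", "ham"),
]
model = train_naive_bayes(training_emails)
print(classify_naive_bayes(model, "Win a cheap prize"))         # spam
print(classify_naive_bayes(model, "Meeting tomorrow at noon"))  # ham
```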

Regression

Regression generates continuous numerical predictions. It models the relationship between a dependent variable and one or more independent variables, such as how advertising spend, product quality, and market conditions affect sales revenue.

Because it produces quantifiable outputs that can reveal hidden trends, regression is especially useful in high-stakes operations such as financial forecasting or pharmaceutical inventory management.
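For the simplest case of one independent variable, ordinary least squares has a closed-form solution: the slope is the covariance of x and y divided by the variance of x, and the intercept makes the line pass through the two means. The sketch below assumes hypothetical ad-spend and revenue figures, chosen to lie exactly on a line so the result is easy to check.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares with one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = sum of (x - mean_x)(y - mean_y) divided by sum of (x - mean_x)^2
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x  # the fitted line passes through (mean_x, mean_y)
    return slope, intercept

# Hypothetical data: monthly ad spend vs. sales revenue (both in $1,000s)
ad_spend = [1, 2, 3, 4, 5]
revenue = [12, 14, 16, 18, 20]
slope, intercept = fit_simple_linear_regression(ad_spend, revenue)
print(slope, intercept)       # 2.0 10.0
print(slope * 6 + intercept)  # 22.0: predicted revenue for an ad spend of 6
```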

Common regression machine learning algorithms used by data scientists include:

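Linear Regression: Linear regression fits a straight-line relationship between variables (or a flat hyperplane when there are several inputs), typically by minimizing the squared error between predictions and observed values. Its simplicity makes it ideal for uncovering basic trends and generating initial insights.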

Gradient Boosting: Gradient boosting builds a series of models, usually shallow decision trees, where each new model is fit to the errors left by the ones before it. The result is often highly accurate predictions, well suited to complex scenarios.

Random Forest: Random forest tackles complexity with an ensemble approach. It combines many decision trees, each trained on a random sample of the data and random subsets of the features, and averages their predictions. This reduces the risk of overfitting and allows it to handle large datasets with intricate relationships.
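To show what “each model learning from the errors of the last” means, here is a simplified gradient boosting sketch for squared-error loss, using one input feature and depth-1 trees (stumps). These restrictions, the function names, and the toy data are assumptions for illustration; production libraries use deeper trees, regularization, and many more options.

```python
def fit_stump(xs, targets):
    """Fit a depth-1 regression tree: one threshold, a constant on each side,
    chosen to minimize squared error. Assumes at least two distinct x values."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the max so the right side is never empty
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        error = (sum((t - left_mean) ** 2 for t in left)
                 + sum((t - right_mean) ** 2 for t in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_value, right_value = best
    return lambda x: left_value if x <= threshold else right_value

def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Gradient boosting with squared-error loss: each new stump is fit to the
    residuals (the negative gradient) of the ensemble built so far."""
    base = sum(ys) / len(ys)  # start from a constant prediction: the mean
    stumps = []

    def predict(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict

# Hypothetical data with a jump that a single average cannot capture
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1, 1, 1, 1, 5, 5, 5, 5]
model = gradient_boost(xs, ys)
print(round(model(2), 2), round(model(7), 2))  # 1.01 4.99
```

Each stump moves the prediction a fraction (`learning_rate`) of the way toward the remaining error, so the training error shrinks round by round. A smaller learning rate needs more rounds but is commonly used to reduce overfitting.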

2. Unsupervised learning

Unsupervised learning makes autonomous data analysis possible. Unlike supervised learning, which relies on labeled data, unsupervised algorithms work with unlabeled data, deriving patterns and structures, such as clusters of similar data points, directly from it.

It operates without human-provided labels, ingesting data to uncover inherent relationships and patterns. These models are useful for tasks like customer segmentation and anomaly detection. K-means clustering, for example, is well suited to automatically grouping large datasets: applied to customer data, it can group customers by shared characteristics like purchase history or demographics. This can reveal hidden customer segments that traditional methods might miss, enabling more personalized marketing campaigns and experiences.
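The assign-then-update loop behind k-means fits in a few lines. This is a minimal sketch of the standard (Lloyd’s) algorithm, assuming hypothetical customer records of (orders per year, average order value in dollars); a real segmentation would normally scale the features and try several random initializations.

```python
import math
import random

def k_means(points, k, max_iters=100, seed=0):
    """Plain k-means (Lloyd's algorithm). Returns (centroids, clusters)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    for _ in range(max_iters):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points
        # (an empty cluster keeps its old centroid).
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:  # assignments have stabilized
            break
        centroids = new_centroids
    return centroids, clusters

# Hypothetical customers: (orders per year, average order value)
customers = [(1, 20), (2, 25), (1.5, 22), (20, 200), (22, 210), (21, 190)]
centroids, clusters = k_means(customers, k=2)
for centroid, members in zip(centroids, clusters):
    print(centroid, members)
```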

Common unsupervised machine learning algorithms used by data scientists include:

K-means clustering: This algorithm divides the data into a predefined number (k) of clusters based on similarity. It iteratively assigns each data point to the nearest cluster center (centroid) and then recalculates each centroid as the mean of its assigned points. This process continues until the assignments stabilize, revealing distinct groups within the data.

Hierarchical clustering: Hierarchical clustering builds a hierarchy of nested clusters. In its common agglomerative form, it starts with each data point as its own cluster and progressively merges the most similar clusters. This approach allows data scientists to explore the data at various levels of granularity, identifying both high-level groupings and finer sub-clusters.

Gaussian Mixture Models (GMMs): This technique assumes that the data originates from a mixture of several Gaussian (normal) distributions. GMMs estimate the parameters of these underlying distributions, typically with the expectation-maximization (EM) algorithm. This lets them segment the data into distinct groups based on the probability of each data point belonging to each distribution. This approach is particularly useful for identifying hidden patterns within complex datasets.
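Hierarchical clustering’s “levels of granularity” come from recording every merge and then cutting the hierarchy at a chosen distance. The sketch below assumes single linkage (the distance between two clusters is the distance between their closest members), one of several common linkage rules, and uses hypothetical points on a line. It is a naive implementation meant only for small inputs.

```python
import math
from itertools import combinations

def single_linkage(points):
    """Agglomerative clustering with single linkage. Returns the merges in order
    as (cluster_a, cluster_b, distance). Assumes the points are distinct."""
    clusters = [frozenset([p]) for p in points]  # every point starts as its own cluster
    merges = []

    def distance(a, b):
        return min(math.dist(p, q) for p in a for q in b)

    while len(clusters) > 1:
        # Merge the closest pair of clusters.
        a, b = min(combinations(clusters, 2), key=lambda pair: distance(*pair))
        merges.append((a, b, distance(a, b)))
        clusters = [c for c in clusters if c not in (a, b)] + [a | b]
    return merges

def cut(points, merges, threshold):
    """Replay merges up to a distance threshold to get clusters at that granularity."""
    clusters = {frozenset([p]) for p in points}
    for a, b, d in merges:
        if d > threshold:
            break  # single-linkage merge distances never decrease, so we can stop here
        clusters -= {a, b}
        clusters.add(a | b)
    return clusters

points = [(0, 0), (1, 0), (5, 0), (6, 0), (20, 0)]
merges = single_linkage(points)
for threshold in (2, 5, 100):  # fine, coarser, and a single all-in-one cluster
    print(threshold, sorted(sorted(c) for c in cut(points, merges, threshold)))
```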

3. Semi-supervised learning

Semi-supervised learning combines the strengths of supervised and unsupervised methods. This dual approach helps make the most of the available data.

These models combine a small set of labeled data with a much larger pool of unlabeled data, which can significantly improve model performance when labels are expensive to obtain. For example, an image classifier might learn from a few hundred labeled images plus a far larger set of unlabeled ones. Used well, this technique can improve accuracy and generalization. Methods to achieve this include self-training, co-training, and graph-based methods.
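Self-training, the first method listed, can be sketched as a loop: fit on the labeled data, pseudo-label the unlabeled points the model is confident about, and refit. Here the base model is a simple nearest-centroid classifier, and “confidence” is the gap between the nearest and second-nearest centroid distances; both choices, the `min_margin` threshold, and the data are assumptions for illustration.

```python
import math

def fit_centroids(labeled):
    """Nearest-centroid classifier: the learned model is one mean point per label."""
    groups = {}
    for point, label in labeled:
        groups.setdefault(label, []).append(point)
    return {label: tuple(sum(c) / len(pts) for c in zip(*pts))
            for label, pts in groups.items()}

def predict_with_margin(centroids, point):
    """Return (label, margin): margin = second-nearest minus nearest centroid distance.
    Assumes at least two labels."""
    ranked = sorted((math.dist(point, c), label) for label, c in centroids.items())
    (d1, label), (d2, _) = ranked[0], ranked[1]
    return label, d2 - d1

def self_train(labeled, unlabeled, min_margin=1.0, max_rounds=10):
    """Repeatedly pseudo-label confident unlabeled points, add them, and refit."""
    labeled, unlabeled = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        centroids = fit_centroids(labeled)
        confident, uncertain = [], []
        for point in unlabeled:
            label, margin = predict_with_margin(centroids, point)
            if margin >= min_margin:
                confident.append((point, label))
            else:
                uncertain.append(point)
        if not confident:
            break  # nothing left that the model is sure about
        labeled += confident
        unlabeled = uncertain
    return fit_centroids(labeled)

# Hypothetical data: only two labeled points and seven unlabeled ones
labeled = [((0, 0), "A"), ((10, 10), "B")]
unlabeled = [(1, 1), (2, 0), (0, 2), (9, 9), (8, 10), (10, 8), (5, 5)]
print(self_train(labeled, unlabeled))  # {'A': (0.75, 0.75), 'B': (9.25, 9.25)}
```

The point (5, 5) sits exactly between the two groups, so it never clears the confidence threshold and is left unlabeled rather than guessed.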

Beyond making efficient use of resources, semi-supervised learning holds significant potential in fields such as natural language processing (NLP), fraud detection, and bioinformatics, where labeled data is often scarce.

4. Reinforcement learning

Reinforcement learning models learn to make decisions that maximize cumulative reward through interaction with an environment. This method mirrors behavioral psychology: trial and error guides actions, and feedback reinforces desirable behaviors.

Central to this approach is the Markov Decision Process (MDP). This framework models the environment through states, actions, transitions between states, and rewards. Techniques like Q-learning and policy gradient methods enable the model to learn an optimal policy, that is, a strategy for choosing an action in each state.
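Q-learning can be illustrated on a toy MDP: five states in a row, the actions “left” and “right,” and a reward of 1 for reaching the rightmost state. The environment, the hyperparameters (`alpha`, `gamma`, `epsilon`), and the episode count are arbitrary assumptions for this sketch. After each step, the agent moves its estimate Q(s, a) toward the reward plus the discounted value of the best action available in the next state.

```python
import random

N_STATES = 5        # states 0..4 on a line; state 4 is the goal (terminal)
ACTIONS = (-1, +1)  # step left or step right

def step(state, action):
    """Toy environment: returns (next_state, reward, done). Reward 1 only at the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                # exploit, breaking ties randomly so early episodes still wander
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)  # {0: 1, 1: 1, 2: 1, 3: 1}: step right in every non-goal state
```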

The applications of reinforcement learning are vast. They extend from gameplay to complex tasks like robotic control and autonomous driving. This adaptability and potential for decision-making under uncertainty position reinforcement learning as a key driver of innovation.

How do machine learning models differ from machine learning algorithms?

The terms “ML model” and “ML algorithm” are often used interchangeably, but they refer to distinct things.

ML models are the output of learning algorithms after training with data. They are the final product, representing what the algorithm has been “taught.”

The algorithm is the procedure applied during the training phase. It determines how the model learns from data and therefore shapes the final model’s form and function.

For example, enterprise-level AI solutions rely on a complete, trained ML model whose learned parameters stay fixed in operation. Keeping these parameters consistent helps the deployed model maintain stable, predictable performance.
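In code, the distinction can look like this: the training function is the algorithm, and the object it returns is the model. The threshold classifier and the study-hours data are hypothetical, chosen only because they are small enough to read at a glance. Marking the model immutable mirrors the idea of fixed parameters in deployment.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ThresholdModel:
    """The model: learned parameters (here, one threshold) plus how to use them.
    frozen=True prevents the parameters from changing after training."""
    threshold: float

    def predict(self, x):
        return x >= self.threshold

def train_threshold_classifier(xs, labels):
    """The algorithm: a procedure that searches candidate thresholds and
    returns the model that makes the fewest mistakes on the training data."""
    best_threshold, best_errors = None, None
    for t in sorted(set(xs)):
        errors = sum((x >= t) != label for x, label in zip(xs, labels))
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = t, errors
    return ThresholdModel(threshold=best_threshold)

# Hypothetical data: hours studied -> passed the exam?
hours = [1, 2, 3, 4, 5, 6]
passed = [False, False, False, True, True, True]
model = train_threshold_classifier(hours, passed)  # run the algorithm once...
print(model)                                      # ThresholdModel(threshold=4)
print(model.predict(4.5), model.predict(2))       # ...then use only the model: True False
```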

Machine learning models going forward

By this point, readers should understand the different types of ML models driving innovation in sectors like healthcare, finance, and manufacturing. However, wider adoption across more use cases requires addressing some key challenges.

Data privacy is a key concern, as increasingly sophisticated models run on sensitive data. Robust governance frameworks and explainable AI are crucial to ensure responsible use and address these concerns. Bias is another issue: skewed datasets can lead to unfair outcomes.

Mitigating bias requires diverse datasets, targeted retraining, and ongoing monitoring. ML models can also generate outputs that seem plausible but are incorrect. These “hallucinations” pose another challenge.

If we hand responsibility for high-risk tasks over to AI, it must be done with care. An ML model that confidently makes an inaccurate decision in a medical emergency could have serious consequences.

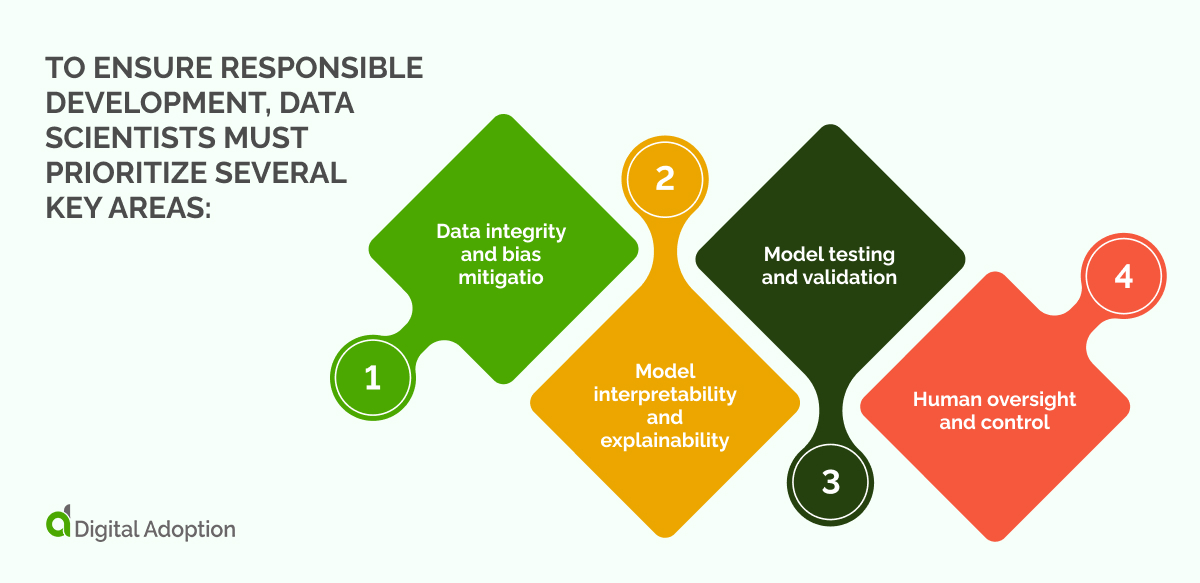

To ensure responsible development, data scientists must prioritize several key areas:

- Data integrity and bias mitigation: Flawed or biased data can lead to discriminatory or erroneous outcomes. Data scientists should use high-quality, diverse datasets and apply techniques to identify and mitigate bias within the data itself.

- Model interpretability and explainability: The inner workings of complex models can be opaque. Data scientists need to develop methods to make models more interpretable. This allows humans to understand the rationale behind a model’s decision, particularly in critical situations.

- Model testing and validation: Thorough testing and validation are essential to assess a model’s performance under various conditions, including edge cases and scenarios with limited data.

- Human oversight and control: Even the most advanced models are not error-free. Human oversight and control mechanisms must be implemented to ensure AI recommendations are carefully reviewed and validated before being acted upon in high-risk situations.
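One common way to carry out the testing and validation described above is k-fold cross-validation, which this article does not name explicitly: the data is split into k folds, and each fold takes one turn as the held-out test set. The sketch assumes a `train_fn` that returns a prediction function, and uses a hypothetical majority-class baseline as the model being evaluated.

```python
import random
from collections import Counter

def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1, split them into k near-equal folds, and yield
    (train_indices, test_indices) with each fold used once as the test set."""
    order = list(range(n))
    random.Random(seed).shuffle(order)
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = order[start:start + size]
        train = order[:start] + order[start + size:]
        yield train, test
        start += size

def cross_validate(train_fn, xs, ys, k=5, seed=0):
    """Return one accuracy score per fold. train_fn(xs, ys) must return a predict function."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(xs), k, seed):
        predict = train_fn([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        correct = sum(predict(xs[i]) == ys[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return scores

def majority_baseline(xs, ys):
    """Hypothetical baseline 'algorithm': always predict the most common training label."""
    most_common = Counter(ys).most_common(1)[0][0]
    return lambda x: most_common

labels = ["ok"] * 8 + ["fail"] * 2
scores = cross_validate(majority_baseline, list(range(10)), labels, k=5)
print(sum(scores) / len(scores))  # 0.8 for any shuffle: the baseline always says "ok"
```

Comparing a real model’s fold scores against such a trivial baseline is a quick sanity check, and widely varying scores across folds can signal that performance depends heavily on which data the model happens to see.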

According to Statista, the machine learning market is projected to reach nearly $80 billion in value by 2024 and exceed $500 billion by 2030.

Going forward, collaboration between data scientists, ethicists, policymakers, and the public is needed. Such collaboration can help mitigate unforeseen risks and pave the way for the responsible integration of AI.

FACT CHECKED
